Static Public Member Functions | |

| def | getrunnumberfromfilename (filename) |

Public Attributes | |

| bad_files | |

| castorDir | |

| files | |

| filesAndSizes | |

| good_files | |

| lfnDir | |

| maskExists | |

| report | |

Public Attributes inherited from dataset.BaseDataset | |

| bad_files | |

| dbsInstance | |

| MM. More... | |

| files | |

| filesAndSizes | |

| good_files | |

| name | |

| pattern | |

| primaryDatasetEntries | |

| MM. More... | |

| report | |

| run_range | |

| user | |

Static Public Attributes | |

| dasData = das_client.get_data(dasQuery, dasLimit) | |

| error = self.__findInJson(jsondict,["data","error"]) | |

| int | i = 0 |

| jsondict = json.loads( dasData ) | |

| string | jsonfile = "das_query_output_%i.txt" |

| jsonfile = jsonfile%i | |

| jsonstr = self.__findInJson(jsondict,"reason") | |

| string | msg = "The DAS query returned an error. The output is very long, and has been stored in:\n" |

| theFile = open( jsonfile, "w" ) | |

Private Member Functions | |

| def | __chunks (self, theList, n) |

| def | __createSnippet (self, jsonPath=None, begin=None, end=None, firstRun=None, lastRun=None, repMap=None, crab=False, parent=False) |

| def | __dateString (self, date) |

| def | __datetime (self, stringForDas) |

| def | __fileListSnippet (self, crab=False, parent=False, firstRun=None, lastRun=None, forcerunselection=False) |

| def | __find_ge (self, a, x) |

| def | __find_lt (self, a, x) |

| def | __findInJson (self, jsondict, strings) |

| def | __getData (self, dasQuery, dasLimit=0) |

| def | __getDataType (self) |

| def | __getFileInfoList (self, dasLimit, parent=False) |

| def | __getMagneticField (self) |

| def | __getMagneticFieldForRun (self, run=-1, tolerance=0.5) |

| def | __getParentDataset (self) |

| def | __getRunList (self) |

| def | __lumiSelectionSnippet (self, jsonPath=None, firstRun=None, lastRun=None) |

Static Private Attributes | |

| tuple | __dummy_source_template |

| __source_template | |

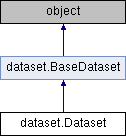

Definition at line 14 of file dataset.py.

| def dataset.Dataset.__init__ | ( | self, datasetName, dasLimit = 0, tryPredefinedFirst = True, cmssw = os.environ["CMSSW_BASE"], cmsswrelease = os.environ["CMSSW_RELEASE_BASE"] | ) |

Definition at line 16 of file dataset.py.

Referenced by dataset.Dataset.__init__().

| def dataset.Dataset.__init__ | ( | self, name, user, pattern = '.*root' | ) |

Definition at line 264 of file dataset.py.

References dataset.Dataset.__init__().

| def dataset.Dataset.__chunks | ( | self, theList, n | ) | private |

Yield successive n-sized chunks from theList.

Definition at line 79 of file dataset.py.

Referenced by dataset.Dataset.__fileListSnippet(), dataset.Dataset.__lumiSelectionSnippet(), and dataset.Dataset.createdatasetfile_hippy().
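The chunking behaviour described above can be illustrated with a short generator. This is a minimal sketch and not the code from dataset.py: the free function `chunks` and the sample file names are hypothetical stand-ins for the private method and its input.

```python
def chunks(the_list, n):
    """Yield successive n-sized chunks from the_list (the last one may be shorter)."""
    for start in range(0, len(the_list), n):
        yield the_list[start:start + n]


# Hypothetical input: five file names split into groups of two.
files = ["a.root", "b.root", "c.root", "d.root", "e.root"]
grouped = list(chunks(files, 2))
```

Splitting a long file list this way keeps each generated block to a bounded size, which is presumably why the snippet-building methods listed above call it.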

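| def dataset.Dataset.__createSnippet | ( | self, jsonPath = None, begin = None, end = None, firstRun = None, lastRun = None, repMap = None, crab = False, parent = False | ) | private |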

Definition at line 230 of file dataset.py.

References dataset.Dataset.__dummy_source_template, dataset.Dataset.__fileListSnippet(), dataset.Dataset.__lumiSelectionSnippet(), dataset.Dataset.__source_template, dataset.Dataset.convertTimeToRun(), and dataset.int.

Referenced by dataset.Dataset.__fileListSnippet(), dataset.Dataset.datasetSnippet(), and dataset.Dataset.dump_cff().

| def dataset.Dataset.__dateString | ( | self, date | ) | private |

Definition at line 621 of file dataset.py.

References dataset.Dataset.convertTimeToRun(), and harvestTrackValidationPlots.str.

Referenced by dataset.Dataset.convertTimeToRun().

| def dataset.Dataset.__datetime | ( | self, stringForDas | ) | private |

Definition at line 612 of file dataset.py.

References dataset.int.

Referenced by dataset.Dataset.convertTimeToRun().

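| def dataset.Dataset.__fileListSnippet | ( | self, crab = False, parent = False, firstRun = None, lastRun = None, forcerunselection = False | ) | private |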

Definition at line 208 of file dataset.py.

References dataset.Dataset.__chunks(), dataset.Dataset.__createSnippet(), dataset.Dataset.fileList(), join(), and list().

Referenced by dataset.Dataset.__createSnippet().

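| def dataset.Dataset.__find_ge | ( | self, a, x | ) | private |

| def dataset.Dataset.__find_lt | ( | self, a, x | ) | private |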

| def dataset.Dataset.__findInJson | ( | self, jsondict, strings | ) | private |

Definition at line 289 of file dataset.py.

References dataset.Dataset.__findInJson().

Referenced by dataset.Dataset.__findInJson(), dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__getParentDataset(), dataset.Dataset.__getRunList(), dataset.Dataset.__lumiSelectionSnippet(), dataset.Dataset.convertTimeToRun(), and dataset.Dataset.fileList().
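Because this method lists itself among its references, it is evidently recursive. The sketch below shows one way a recursive lookup through nested JSON could work; it is an assumption about the behaviour, not the implementation in dataset.py. The function name `find_in_json`, the handling of lists, and the sample response are all hypothetical.

```python
def find_in_json(node, keys):
    """Follow a sequence of keys through nested dicts and lists of dicts."""
    if isinstance(keys, str):
        keys = [keys]          # allow a single key, as in the call with "reason"
    if not keys:
        return node            # every key consumed: this is the requested value
    head, rest = keys[0], keys[1:]
    if isinstance(node, dict):
        if head in node:
            return find_in_json(node[head], rest)
    elif isinstance(node, list):
        # Try each dict in the list that has the key until one branch succeeds.
        for item in node:
            if isinstance(item, dict) and head in item:
                try:
                    return find_in_json(item[head], rest)
                except KeyError:
                    continue
    raise KeyError("Can't find " + head)


# Hypothetical response shape, for illustration only.
response = {"status": "ok", "data": [{"dataset": [{"name": "/A/B/C"}]}, {"error": "boom"}]}
error = find_in_json(response, ["data", "error"])
```

Raising `KeyError` for a missing path lets callers distinguish "not present" from a value that happens to be empty.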

| def dataset.Dataset.__getData | ( | self, dasQuery, dasLimit = 0 | ) | private |

Definition at line 341 of file dataset.py.

Referenced by dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__getParentDataset(), dataset.Dataset.__getRunList(), and dataset.Dataset.convertTimeToRun().
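The static attributes listed for this class (dasData, jsondict, error, jsonstr, jsonfile, theFile, msg) are defined at lines 342 to 366, directly after this method's definition line, so they look like locals of its error handling. The sketch below strings them into one plausible flow. It is an illustration under assumptions: the function `check_das_response`, the `out_dir` and `max_inline` parameters, the use of `RuntimeError`, and the shape of the response are hypothetical, and the raw JSON string stands in for the output of `das_client.get_data`.

```python
import json
import os


def check_das_response(raw, out_dir=".", max_inline=200):
    """Return the "data" payload, or raise RuntimeError describing the failure."""
    jsondict = json.loads(raw)
    data = jsondict.get("data")
    error = data.get("error") if isinstance(data, dict) else None
    if not error:
        return data

    # Prefer the server-supplied reason; fall back to the whole response.
    jsonstr = str(jsondict.get("reason", jsondict))
    if len(jsonstr) <= max_inline:
        raise RuntimeError("The DAS query returned an error:\n" + jsonstr)

    # Pick the first das_query_output_<i>.txt that does not exist yet.
    i = 0
    while os.path.lexists(os.path.join(out_dir, "das_query_output_%i.txt" % i)):
        i += 1
    jsonfile = os.path.join(out_dir, "das_query_output_%i.txt" % i)
    with open(jsonfile, "w") as the_file:
        the_file.write(jsonstr)
    raise RuntimeError(
        "The DAS query returned an error. The output is very long, "
        "and has been stored in:\n" + jsonfile
    )
```

Writing long output to a numbered file keeps the exception message readable while preserving the full server response for inspection.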

| def dataset.Dataset.__getDataType | ( | self | ) | private |

Definition at line 373 of file dataset.py.

References dataset.Dataset.__filename, dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, dataset.Dataset.__predefined, ElectronMVAID.ElectronMVAID.name, counter.Counter.name, average.Average.name, AlignableObjectId::entry.name, histograms.Histograms.name, cond::persistency::RUN_INFO::RUN_NUMBER.name, cond::persistency::TAG::NAME.name, TmModule.name, core.autovars.NTupleVariable.name, cond::persistency::GLOBAL_TAG::NAME.name, cond::persistency::RUN_INFO::START_TIME.name, cond::persistency::TAG::TIME_TYPE.name, cond::persistency::GLOBAL_TAG::VALIDITY.name, cond::persistency::RUN_INFO::END_TIME.name, cond::persistency::TAG::OBJECT_TYPE.name, genericValidation.GenericValidation.name, cond::persistency::GLOBAL_TAG::DESCRIPTION.name, preexistingValidation.PreexistingValidation.name, cond::persistency::TAG::SYNCHRONIZATION.name, cond::persistency::GLOBAL_TAG::RELEASE.name, MEPSet.name, cond::persistency::TAG::END_OF_VALIDITY.name, cond::persistency::GLOBAL_TAG::SNAPSHOT_TIME.name, cond::persistency::GLOBAL_TAG::INSERTION_TIME.name, cond::persistency::TAG::DESCRIPTION.name, cond::persistency::GTEditorData.name, cond::persistency::TAG::LAST_VALIDATED_TIME.name, FWTGeoRecoGeometry::Info.name, Types._Untracked.name, cond::persistency::TAG::INSERTION_TIME.name, dataset.BaseDataset.name, cond::persistency::TAG::MODIFICATION_TIME.name, OutputMEPSet.name, personalPlayback.Applet.name, ParameterSet.name, PixelDCSObject< T >::Item.name, analyzer.Analyzer.name, DQMRivetClient::LumiOption.name, MagCylinder.name, alignment.Alignment.name, ParSet.name, DQMRivetClient::ScaleFactorOption.name, SingleObjectCondition.name, EgHLTOfflineSummaryClient::SumHistBinData.name, cond::persistency::GTProxyData.name, core.autovars.NTupleObjectType.name, MyWatcher.name, Mapper::definition< ScannerT >.name, edm::PathTimingSummary.name, cond::TimeTypeSpecs.name, lumi::TriggerInfo.name, edm::PathSummary.name, cond::persistency::GLOBAL_TAG_MAP::GLOBAL_TAG_NAME.name, 
DQMGenericClient::EfficOption.name, PixelEndcapLinkMaker::Item.name, perftools::EdmEventSize::BranchRecord.name, FWTableViewManager::TableEntry.name, cond::persistency::GLOBAL_TAG_MAP::RECORD.name, PixelBarrelLinkMaker::Item.name, EcalLogicID.name, cond::persistency::GLOBAL_TAG_MAP::LABEL.name, cond::persistency::GLOBAL_TAG_MAP::TAG_NAME.name, ExpressionHisto< T >.name, XMLProcessor::_loaderBaseConfig.name, cond::persistency::PAYLOAD::HASH.name, TreeCrawler.Package.name, cond::persistency::PAYLOAD::OBJECT_TYPE.name, cond::persistency::PAYLOAD::DATA.name, cond::persistency::PAYLOAD::STREAMER_INFO.name, cond::persistency::PAYLOAD::VERSION.name, MagGeoBuilderFromDDD::volumeHandle.name, cond::persistency::PAYLOAD::INSERTION_TIME.name, options.ConnectionHLTMenu.name, DQMGenericClient::ProfileOption.name, emtf::Node.name, DQMGenericClient::NormOption.name, FastHFShowerLibrary.name, h4DSegm.name, core.TriggerMatchAnalyzer.TriggerMatchAnalyzer.name, PhysicsTools::Calibration::Variable.name, DQMGenericClient::CDOption.name, cond::TagInfo_t.name, CounterChecker.name, EDMtoMEConverter.name, looper.Looper.name, MEtoEDM< T >::MEtoEDMObject.name, cond::persistency::IOV::TAG_NAME.name, cond::persistency::IOV::SINCE.name, TrackerSectorStruct.name, classes.MonitorData.name, cond::persistency::IOV::PAYLOAD_HASH.name, cond::persistency::IOV::INSERTION_TIME.name, HistogramManager.name, MuonGeometrySanityCheckPoint.name, classes.OutputData.name, options.HLTProcessOptions.name, h2DSegm.name, core.TriggerBitAnalyzer.TriggerBitAnalyzer.name, config.Analyzer.name, geometry.Structure.name, core.autovars.NTupleSubObject.name, DQMNet::WaitObject.name, AlpgenParameterName.name, SiStripMonitorDigi.name, core.autovars.NTupleObject.name, config.Service.name, cond::persistency::TAG_LOG::TAG_NAME.name, cond::persistency::TAG_LOG::EVENT_TIME.name, cond::persistency::TAG_LOG::USER_NAME.name, cond::persistency::TAG_LOG::HOST_NAME.name, cond::persistency::TAG_LOG::COMMAND.name, 
cond::persistency::TAG_LOG::ACTION.name, cond::persistency::TAG_LOG::USER_TEXT.name, core.autovars.NTupleCollection.name, BPHRecoBuilder::BPHRecoSource.name, BPHRecoBuilder::BPHCompSource.name, personalPlayback.FrameworkJob.name, plotscripts.SawTeethFunction.name, hTMaxCell.name, cscdqm::ParHistoDef.name, BeautifulSoup.Tag.name, SummaryOutputProducer::GenericSummary.name, BeautifulSoup.SoupStrainer.name, and python.rootplot.root2matplotlib.replace().

Referenced by dataset.Dataset.dataType().

| def dataset.Dataset.__getFileInfoList | ( | self, dasLimit, parent = False | ) | private |

Definition at line 533 of file dataset.py.

References dataset.Dataset.__fileInfoList, dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, dataset.Dataset.__parentFileInfoList, dataset.Dataset.__predefined, ElectronMVAID.ElectronMVAID.name, counter.Counter.name, average.Average.name, AlignableObjectId::entry.name, histograms.Histograms.name, cond::persistency::RUN_INFO::RUN_NUMBER.name, cond::persistency::TAG::NAME.name, TmModule.name, cond::persistency::GLOBAL_TAG::NAME.name, core.autovars.NTupleVariable.name, cond::persistency::RUN_INFO::START_TIME.name, cond::persistency::TAG::TIME_TYPE.name, cond::persistency::GLOBAL_TAG::VALIDITY.name, genericValidation.GenericValidation.name, cond::persistency::RUN_INFO::END_TIME.name, cond::persistency::TAG::OBJECT_TYPE.name, cond::persistency::GLOBAL_TAG::DESCRIPTION.name, preexistingValidation.PreexistingValidation.name, cond::persistency::TAG::SYNCHRONIZATION.name, cond::persistency::GLOBAL_TAG::RELEASE.name, cond::persistency::GLOBAL_TAG::SNAPSHOT_TIME.name, MEPSet.name, cond::persistency::TAG::END_OF_VALIDITY.name, cond::persistency::GLOBAL_TAG::INSERTION_TIME.name, cond::persistency::TAG::DESCRIPTION.name, cond::persistency::GTEditorData.name, cond::persistency::TAG::LAST_VALIDATED_TIME.name, FWTGeoRecoGeometry::Info.name, Types._Untracked.name, cond::persistency::TAG::INSERTION_TIME.name, dataset.BaseDataset.name, cond::persistency::TAG::MODIFICATION_TIME.name, OutputMEPSet.name, personalPlayback.Applet.name, ParameterSet.name, PixelDCSObject< T >::Item.name, analyzer.Analyzer.name, DQMRivetClient::LumiOption.name, MagCylinder.name, alignment.Alignment.name, ParSet.name, DQMRivetClient::ScaleFactorOption.name, SingleObjectCondition.name, EgHLTOfflineSummaryClient::SumHistBinData.name, cond::persistency::GTProxyData.name, core.autovars.NTupleObjectType.name, MyWatcher.name, Mapper::definition< ScannerT >.name, edm::PathTimingSummary.name, cond::TimeTypeSpecs.name, lumi::TriggerInfo.name, edm::PathSummary.name, 
cond::persistency::GLOBAL_TAG_MAP::GLOBAL_TAG_NAME.name, DQMGenericClient::EfficOption.name, PixelEndcapLinkMaker::Item.name, perftools::EdmEventSize::BranchRecord.name, FWTableViewManager::TableEntry.name, cond::persistency::GLOBAL_TAG_MAP::RECORD.name, PixelBarrelLinkMaker::Item.name, EcalLogicID.name, cond::persistency::GLOBAL_TAG_MAP::LABEL.name, cond::persistency::GLOBAL_TAG_MAP::TAG_NAME.name, ExpressionHisto< T >.name, XMLProcessor::_loaderBaseConfig.name, TreeCrawler.Package.name, cond::persistency::PAYLOAD::HASH.name, cond::persistency::PAYLOAD::OBJECT_TYPE.name, cond::persistency::PAYLOAD::DATA.name, cond::persistency::PAYLOAD::STREAMER_INFO.name, MagGeoBuilderFromDDD::volumeHandle.name, cond::persistency::PAYLOAD::VERSION.name, cond::persistency::PAYLOAD::INSERTION_TIME.name, options.ConnectionHLTMenu.name, DQMGenericClient::ProfileOption.name, emtf::Node.name, DQMGenericClient::NormOption.name, FastHFShowerLibrary.name, h4DSegm.name, core.TriggerMatchAnalyzer.TriggerMatchAnalyzer.name, PhysicsTools::Calibration::Variable.name, DQMGenericClient::CDOption.name, cond::TagInfo_t.name, CounterChecker.name, EDMtoMEConverter.name, looper.Looper.name, MEtoEDM< T >::MEtoEDMObject.name, cond::persistency::IOV::TAG_NAME.name, cond::persistency::IOV::SINCE.name, TrackerSectorStruct.name, classes.MonitorData.name, cond::persistency::IOV::PAYLOAD_HASH.name, cond::persistency::IOV::INSERTION_TIME.name, HistogramManager.name, MuonGeometrySanityCheckPoint.name, classes.OutputData.name, options.HLTProcessOptions.name, h2DSegm.name, core.TriggerBitAnalyzer.TriggerBitAnalyzer.name, config.Analyzer.name, geometry.Structure.name, core.autovars.NTupleSubObject.name, DQMNet::WaitObject.name, AlpgenParameterName.name, SiStripMonitorDigi.name, core.autovars.NTupleObject.name, config.Service.name, cond::persistency::TAG_LOG::TAG_NAME.name, cond::persistency::TAG_LOG::EVENT_TIME.name, cond::persistency::TAG_LOG::USER_NAME.name, cond::persistency::TAG_LOG::HOST_NAME.name, 
cond::persistency::TAG_LOG::COMMAND.name, cond::persistency::TAG_LOG::ACTION.name, cond::persistency::TAG_LOG::USER_TEXT.name, core.autovars.NTupleCollection.name, BPHRecoBuilder::BPHRecoSource.name, BPHRecoBuilder::BPHCompSource.name, personalPlayback.FrameworkJob.name, plotscripts.SawTeethFunction.name, hTMaxCell.name, cscdqm::ParHistoDef.name, BeautifulSoup.Tag.name, SummaryOutputProducer::GenericSummary.name, BeautifulSoup.SoupStrainer.name, and dataset.Dataset.parentDataset().

Referenced by dataset.Dataset.fileInfoList().

| def dataset.Dataset.__getMagneticField | ( | self | ) | private |

Definition at line 408 of file dataset.py.

References dataset.Dataset.__cmssw, dataset.Dataset.__cmsswrelease, dataset.Dataset.__dataType, dataset.Dataset.__filename, dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, dataset.Dataset.__predefined, python.rootplot.root2matplotlib.replace(), and digi_MixPreMix_cfi.strip.

Referenced by dataset.Dataset.magneticField().

| def dataset.Dataset.__getMagneticFieldForRun | ( | self, run = -1, tolerance = 0.5 | ) | private |

For MC, this returns the same value as __getMagneticField(). For data, it gets the magnetic field from the runs. This is important for deciding which template to use for offline validation.

Definition at line 479 of file dataset.py.

References dataset.Dataset.__dataType, dataset.Dataset.__filename, dataset.Dataset.__findInJson(), dataset.Dataset.__firstusedrun, dataset.Dataset.__getData(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__lastusedrun, dataset.Dataset.__magneticField, dataset.Dataset.__name, dataset.Dataset.__predefined, funct.abs(), objects.autophobj.float, python.rootplot.root2matplotlib.replace(), split, and digi_MixPreMix_cfi.strip.

Referenced by dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.dump_cff(), and dataset.Dataset.magneticFieldForRun().

| def dataset.Dataset.__getParentDataset | ( | self | ) | private |

Definition at line 398 of file dataset.py.

References dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, and harvestTrackValidationPlots.str.

Referenced by dataset.Dataset.parentDataset().

| def dataset.Dataset.__getRunList | ( | self | ) | private |

Definition at line 599 of file dataset.py.

References dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, and dataset.Dataset.__runList.

Referenced by dataset.Dataset.__lumiSelectionSnippet(), dataset.Dataset.convertTimeToRun(), and dataset.Dataset.runList().

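| def dataset.Dataset.__lumiSelectionSnippet | ( | self, jsonPath = None, firstRun = None, lastRun = None | ) | private |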

Definition at line 115 of file dataset.py.

References dataset.Dataset.__chunks(), dataset.Dataset.__findInJson(), dataset.Dataset.__firstusedrun, dataset.Dataset.__getRunList(), dataset.Dataset.__lastusedrun, dataset.Dataset.getForceRunRangeFunction(), dataset.int, join(), list(), hpstanc_transforms.max, min(), python.rootplot.root2matplotlib.replace(), split, and harvestTrackValidationPlots.str.

Referenced by dataset.Dataset.__createSnippet().

| def dataset.Dataset.buildListOfBadFiles | ( | self | ) |

Fills the list of bad files from the IntegrityCheck log. When the integrity check file is not available, all files are considered good.

Definition at line 275 of file dataset.py.

| def dataset.Dataset.buildListOfFiles | ( | self, pattern = '.*root' | ) |

Fills the list of files, taking all ROOT files in the castor directory that match the pattern.

Definition at line 271 of file dataset.py.
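The default pattern `'.*root'` is a regular expression. As a rough sketch of how such a pattern could filter a directory listing, assuming the match is done with `re.match` (the helper `select_files` and the listing below are hypothetical, and the real method may obtain and match names differently):

```python
import re


def select_files(names, pattern=".*root"):
    """Keep only the names that the regular expression matches from their start."""
    regex = re.compile(pattern)
    return [name for name in names if regex.match(name)]


# Hypothetical directory listing.
listing = ["tree_1.root", "tree_2.root", "job.log", "IntegrityCheck.txt"]
selected = select_files(listing)
```

Note that `re.match` only anchors at the start of the string, so `'.*root'` also accepts a name such as `rootfile.txt`; a stricter pattern like `r'.*\.root$'` would be needed to require the extension.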

| def dataset.Dataset.convertTimeToRun | ( | self, begin = None, end = None, firstRun = None, lastRun = None, shortTuple = True | ) |

Definition at line 626 of file dataset.py.

References dataset.Dataset.__dateString(), dataset.Dataset.__datetime(), dataset.Dataset.__find_ge(), dataset.Dataset.__find_lt(), dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__getRunList(), dataset.Dataset.__name, and dataset.int.

Referenced by dataset.Dataset.__createSnippet(), and dataset.Dataset.__dateString().

| def dataset.Dataset.createdatasetfile_hippy | ( | self, filename, filesperjob, firstrun, lastrun | ) |

Definition at line 831 of file dataset.py.

References dataset.Dataset.__chunks(), dataset.Dataset.fileList(), and join().

| def dataset.Dataset.datasetSnippet | ( | self, jsonPath = None, begin = None, end = None, firstRun = None, lastRun = None, crab = False, parent = False | ) |

Definition at line 710 of file dataset.py.

References dataset.Dataset.__createSnippet(), dataset.Dataset.__filename, dataset.Dataset.__name, dataset.Dataset.__official, dataset.Dataset.__origName, dataset.Dataset.__predefined, and dataset.Dataset.dump_cff().

Referenced by dataset.Dataset.parentDataset().

| def dataset.Dataset.dataType | ( | self | ) |

Definition at line 691 of file dataset.py.

References dataset.Dataset.__dataType, and dataset.Dataset.__getDataType().

| def dataset.Dataset.dump_cff | ( | self, outName = None, jsonPath = None, begin = None, end = None, firstRun = None, lastRun = None, parent = False | ) |

Definition at line 767 of file dataset.py.

References dataset.Dataset.__alreadyStored, dataset.Dataset.__cmssw, dataset.Dataset.__createSnippet(), dataset.Dataset.__dataType, dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__magneticField, dataset.Dataset.__name, python.rootplot.root2matplotlib.replace(), split, harvestTrackValidationPlots.str, and digi_MixPreMix_cfi.strip.

Referenced by dataset.Dataset.datasetSnippet().

| def dataset.Dataset.extractFileSizes | ( | self | ) |

Get the file size for each file, from the eos ls -l command.

Definition at line 306 of file dataset.py.

References dataset.EOSDataset.castorDir, and dataset.Dataset.castorDir.
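As an illustration of extracting sizes from a long listing, the sketch below parses text laid out like POSIX `ls -l` output. That `eos ls -l` uses the same column layout is an assumption, and the helper `parse_sizes` and the sample listing are hypothetical.

```python
def parse_sizes(listing):
    """Map file name -> size in bytes from ls -l style text."""
    sizes = {}
    for line in listing.splitlines():
        fields = line.split()
        # Expected columns: mode, links, owner, group, size, month, day, time, name
        if len(fields) < 9:
            continue
        sizes[fields[-1]] = int(fields[4])
    return sizes


# Hypothetical listing text.
sample = """\
-rw-r--r--   1 user group   1048576 Jan 01 12:00 tree_1.root
-rw-r--r--   1 user group   2097152 Jan 01 12:05 tree_2.root
"""
file_sizes = parse_sizes(sample)
```

Splitting on whitespace is enough only when file names contain no spaces.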

| def dataset.Dataset.fileInfoList |

Definition at line 901 of file dataset.py.

References dataset.Dataset.__dasLimit, and dataset.Dataset.__getFileInfoList().

Referenced by dataset.Dataset.fileList().

| def dataset.Dataset.fileList | ( | self, parent = False, firstRun = None, lastRun = None, forcerunselection = False | ) |

Definition at line 863 of file dataset.py.

References dataset.Dataset.__fileList, dataset.Dataset.__findInJson(), dataset.Dataset.__parentFileList, dataset.Dataset.fileInfoList(), objects.autophobj.float, and dataset.Dataset.getrunnumberfromfilename().

Referenced by dataset.Dataset.__fileListSnippet(), and dataset.Dataset.createdatasetfile_hippy().

| def dataset.Dataset.forcerunrange | ( | self, firstRun, lastRun, s | ) |

s must be in the format run1:lum1-run2:lum2

Definition at line 311 of file dataset.py.

References dataset.Dataset.__firstusedrun, dataset.Dataset.__lastusedrun, dataset.int, and split.

Referenced by dataset.Dataset.getForceRunRangeFunction().
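The `run1:lum1-run2:lum2` format can be split with plain string operations, consistent with the `split` and `int` references listed above. The sketch below only shows the parsing and a simple overlap test against a requested run window; what the real method does with the parsed values is not stated here, so `parse_lumi_range` and `overlaps` are hypothetical helpers.

```python
def parse_lumi_range(s):
    """Split 'run1:lum1-run2:lum2' into four integers."""
    first, last = s.split("-")
    run1, lum1 = (int(x) for x in first.split(":"))
    run2, lum2 = (int(x) for x in last.split(":"))
    return run1, lum1, run2, lum2


def overlaps(s, first_run, last_run):
    """True if the run interval encoded in s intersects [first_run, last_run]."""
    run1, _, run2, _ = parse_lumi_range(s)
    return run2 >= first_run and run1 <= last_run


parsed = parse_lumi_range("190000:1-190100:250")
```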

| def dataset.Dataset.getForceRunRangeFunction | ( | self, firstRun, lastRun | ) |

Definition at line 336 of file dataset.py.

References dataset.Dataset.forcerunrange().

Referenced by dataset.Dataset.__lumiSelectionSnippet().

| def dataset.Dataset.getPrimaryDatasetEntries | ( | self | ) |

Definition at line 326 of file dataset.py.

References dataset.int, runall.testit.report, WorkFlowRunner.WorkFlowRunner.report, dataset.BaseDataset.report, and ALIUtils.report.

| def dataset.Dataset.getrunnumberfromfilename | ( | filename | ) | static |

Definition at line 837 of file dataset.py.

References Vispa.Plugins.EdmBrowser.EdmDataAccessor.all(), dataset.int, and join().

Referenced by dataset.Dataset.fileList().

| def dataset.Dataset.magneticField | ( | self | ) |

Definition at line 696 of file dataset.py.

References dataset.Dataset.__getMagneticField(), and dataset.Dataset.__magneticField.

| def dataset.Dataset.magneticFieldForRun | ( | self, run = -1 | ) |

Definition at line 701 of file dataset.py.

References dataset.Dataset.__getMagneticFieldForRun().

| def dataset.Dataset.name | ( | self | ) |

Definition at line 904 of file dataset.py.

References dataset.Dataset.__name.

Referenced by config.CFG.__str__(), validation.Sample.digest(), VIDSelectorBase.VIDSelectorBase.initialize(), and Vispa.Views.PropertyView.Property.valueChanged().

| def dataset.Dataset.parentDataset | ( | self | ) |

Definition at line 704 of file dataset.py.

References dataset.Dataset.__getParentDataset(), dataset.Dataset.__parentDataset, and dataset.Dataset.datasetSnippet().

Referenced by dataset.Dataset.__getFileInfoList().

| def dataset.Dataset.predefined | ( | self | ) |

| def dataset.Dataset.printInfo | ( | self | ) |

Definition at line 321 of file dataset.py.

References dataset.EOSDataset.castorDir, dataset.Dataset.castorDir, dataset.Dataset.lfnDir, ElectronMVAID.ElectronMVAID.name, counter.Counter.name, average.Average.name, histograms.Histograms.name, AlignableObjectId::entry.name, TmModule.name, cond::persistency::TAG::NAME.name, cond::persistency::GLOBAL_TAG::NAME.name, core.autovars.NTupleVariable.name, cond::persistency::RUN_INFO::RUN_NUMBER.name, cond::persistency::TAG::TIME_TYPE.name, cond::persistency::GLOBAL_TAG::VALIDITY.name, cond::persistency::RUN_INFO::START_TIME.name, genericValidation.GenericValidation.name, cond::persistency::TAG::OBJECT_TYPE.name, cond::persistency::GLOBAL_TAG::DESCRIPTION.name, cond::persistency::RUN_INFO::END_TIME.name, cond::persistency::TAG::SYNCHRONIZATION.name, preexistingValidation.PreexistingValidation.name, cond::persistency::GLOBAL_TAG::RELEASE.name, MEPSet.name, cond::persistency::TAG::END_OF_VALIDITY.name, cond::persistency::GLOBAL_TAG::SNAPSHOT_TIME.name, cond::persistency::TAG::DESCRIPTION.name, cond::persistency::GTEditorData.name, cond::persistency::GLOBAL_TAG::INSERTION_TIME.name, cond::persistency::TAG::LAST_VALIDATED_TIME.name, FWTGeoRecoGeometry::Info.name, Types._Untracked.name, cond::persistency::TAG::INSERTION_TIME.name, cond::persistency::TAG::MODIFICATION_TIME.name, dataset.BaseDataset.name, OutputMEPSet.name, personalPlayback.Applet.name, ParameterSet.name, PixelDCSObject< T >::Item.name, analyzer.Analyzer.name, DQMRivetClient::LumiOption.name, MagCylinder.name, alignment.Alignment.name, ParSet.name, DQMRivetClient::ScaleFactorOption.name, SingleObjectCondition.name, EgHLTOfflineSummaryClient::SumHistBinData.name, cond::persistency::GTProxyData.name, core.autovars.NTupleObjectType.name, MyWatcher.name, Mapper::definition< ScannerT >.name, edm::PathTimingSummary.name, cond::TimeTypeSpecs.name, lumi::TriggerInfo.name, edm::PathSummary.name, PixelEndcapLinkMaker::Item.name, DQMGenericClient::EfficOption.name, perftools::EdmEventSize::BranchRecord.name, 
cond::persistency::GLOBAL_TAG_MAP::GLOBAL_TAG_NAME.name, FWTableViewManager::TableEntry.name, PixelBarrelLinkMaker::Item.name, cond::persistency::GLOBAL_TAG_MAP::RECORD.name, EcalLogicID.name, cond::persistency::GLOBAL_TAG_MAP::LABEL.name, ExpressionHisto< T >.name, cond::persistency::GLOBAL_TAG_MAP::TAG_NAME.name, XMLProcessor::_loaderBaseConfig.name, cond::persistency::PAYLOAD::HASH.name, TreeCrawler.Package.name, cond::persistency::PAYLOAD::OBJECT_TYPE.name, cond::persistency::PAYLOAD::DATA.name, cond::persistency::PAYLOAD::STREAMER_INFO.name, MagGeoBuilderFromDDD::volumeHandle.name, cond::persistency::PAYLOAD::VERSION.name, cond::persistency::PAYLOAD::INSERTION_TIME.name, options.ConnectionHLTMenu.name, DQMGenericClient::ProfileOption.name, emtf::Node.name, DQMGenericClient::NormOption.name, FastHFShowerLibrary.name, h4DSegm.name, core.TriggerMatchAnalyzer.TriggerMatchAnalyzer.name, PhysicsTools::Calibration::Variable.name, DQMGenericClient::CDOption.name, cond::TagInfo_t.name, CounterChecker.name, EDMtoMEConverter.name, looper.Looper.name, MEtoEDM< T >::MEtoEDMObject.name, cond::persistency::IOV::TAG_NAME.name, TrackerSectorStruct.name, cond::persistency::IOV::SINCE.name, cond::persistency::IOV::PAYLOAD_HASH.name, classes.MonitorData.name, cond::persistency::IOV::INSERTION_TIME.name, HistogramManager.name, MuonGeometrySanityCheckPoint.name, classes.OutputData.name, options.HLTProcessOptions.name, h2DSegm.name, core.TriggerBitAnalyzer.TriggerBitAnalyzer.name, config.Analyzer.name, geometry.Structure.name, core.autovars.NTupleSubObject.name, DQMNet::WaitObject.name, AlpgenParameterName.name, SiStripMonitorDigi.name, core.autovars.NTupleObject.name, config.Service.name, cond::persistency::TAG_LOG::TAG_NAME.name, cond::persistency::TAG_LOG::EVENT_TIME.name, cond::persistency::TAG_LOG::USER_NAME.name, cond::persistency::TAG_LOG::HOST_NAME.name, cond::persistency::TAG_LOG::COMMAND.name, cond::persistency::TAG_LOG::ACTION.name, 
cond::persistency::TAG_LOG::USER_TEXT.name, core.autovars.NTupleCollection.name, BPHRecoBuilder::BPHRecoSource.name, BPHRecoBuilder::BPHCompSource.name, personalPlayback.FrameworkJob.name, plotscripts.SawTeethFunction.name, hTMaxCell.name, cscdqm::ParHistoDef.name, BeautifulSoup.Tag.name, SummaryOutputProducer::GenericSummary.name, and BeautifulSoup.SoupStrainer.name.

| def dataset.Dataset.runList | ( | self | ) |

Definition at line 910 of file dataset.py.

References dataset.Dataset.__getRunList(), and dataset.Dataset.__runList.

| dataset.Dataset.__alreadyStored | private |

Definition at line 23 of file dataset.py.

Referenced by dataset.Dataset.dump_cff().

| dataset.Dataset.__cmssw | private |

Definition at line 24 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticField(), and dataset.Dataset.dump_cff().

| dataset.Dataset.__cmsswrelease | private |

Definition at line 25 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticField().

| dataset.Dataset.__dasLimit | private |

Definition at line 19 of file dataset.py.

Referenced by dataset.Dataset.fileInfoList().

| dataset.Dataset.__dataType | private |

Definition at line 76 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.dataType(), and dataset.Dataset.dump_cff().

| tuple dataset.Dataset.__dummy_source_template | static private |

Definition at line 103 of file dataset.py.

Referenced by dataset.Dataset.__createSnippet().

| dataset.Dataset.__fileInfoList | private |

Definition at line 21 of file dataset.py.

Referenced by dataset.Dataset.__getFileInfoList().

| dataset.Dataset.__fileList | private |

Definition at line 20 of file dataset.py.

Referenced by dataset.Dataset.fileList().

| dataset.Dataset.__filename | private |

Definition at line 53 of file dataset.py.

Referenced by dataset.Dataset.__getDataType(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.datasetSnippet(), csvReporter.csvReporter.writeRow(), and csvReporter.csvReporter.writeRows().

| dataset.Dataset.__firstusedrun | private |

Definition at line 26 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__lumiSelectionSnippet(), and dataset.Dataset.forcerunrange().

| dataset.Dataset.__lastusedrun | private |

Definition at line 27 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__lumiSelectionSnippet(), and dataset.Dataset.forcerunrange().

| dataset.Dataset.__magneticField | private |

Definition at line 77 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.dump_cff(), and dataset.Dataset.magneticField().

| dataset.Dataset.__name | private |

Definition at line 17 of file dataset.py.

Referenced by dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__getParentDataset(), dataset.Dataset.__getRunList(), dataset.Dataset.convertTimeToRun(), dataset.Dataset.datasetSnippet(), dataset.Dataset.dump_cff(), Config.Process.dumpConfig(), Config.Process.dumpPython(), dataset.Dataset.name(), and Config.Process.name_().

| dataset.Dataset.__official | private |

Definition at line 34 of file dataset.py.

Referenced by dataset.Dataset.datasetSnippet().

| dataset.Dataset.__origName | private |

Definition at line 18 of file dataset.py.

Referenced by dataset.Dataset.datasetSnippet().

| dataset.Dataset.__parentDataset | private |

Definition at line 28 of file dataset.py.

Referenced by dataset.Dataset.parentDataset().

| dataset.Dataset.__parentFileInfoList | private |

Definition at line 30 of file dataset.py.

Referenced by dataset.Dataset.__getFileInfoList().

| dataset.Dataset.__parentFileList | private |

Definition at line 29 of file dataset.py.

Referenced by dataset.Dataset.fileList().

| dataset.Dataset.__predefined | private |

Definition at line 50 of file dataset.py.

Referenced by dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.datasetSnippet(), and dataset.Dataset.predefined().

| dataset.Dataset.__runList | private |

Definition at line 22 of file dataset.py.

Referenced by dataset.Dataset.__getRunList(), and dataset.Dataset.runList().

| dataset.Dataset.__source_template | static private |

Definition at line 85 of file dataset.py.

Referenced by dataset.Dataset.__createSnippet().

| dataset.Dataset.bad_files |

Definition at line 282 of file dataset.py.

| dataset.Dataset.castorDir |

Definition at line 266 of file dataset.py.

Referenced by dataset.Dataset.extractFileSizes(), and dataset.Dataset.printInfo().

| dataset.Dataset.dasData = das_client.get_data(dasQuery, dasLimit) | static |

Definition at line 342 of file dataset.py.

| dataset.Dataset.error = self.__findInJson(jsondict,["data","error"]) | static |

Definition at line 349 of file dataset.py.

Referenced by argparse.ArgumentParser._get_option_tuples(), python.rootplot.argparse.ArgumentParser._get_option_tuples(), argparse.ArgumentParser._parse_known_args(), python.rootplot.argparse.ArgumentParser._parse_known_args(), argparse.ArgumentParser._parse_optional(), python.rootplot.argparse.ArgumentParser._parse_optional(), argparse.ArgumentParser._read_args_from_files(), python.rootplot.argparse.ArgumentParser._read_args_from_files(), argparse.ArgumentParser.add_subparsers(), python.rootplot.argparse.ArgumentParser.add_subparsers(), Page1Parser.Page1Parser.check_for_whole_start_tag(), argparse.ArgumentParser.parse_args(), python.rootplot.argparse.ArgumentParser.parse_args(), argparse.ArgumentParser.parse_known_args(), and python.rootplot.argparse.ArgumentParser.parse_known_args().

| dataset.Dataset.files |

Definition at line 273 of file dataset.py.

| dataset.Dataset.filesAndSizes |

Definition at line 311 of file dataset.py.

| dataset.Dataset.good_files |

Definition at line 283 of file dataset.py.

| int dataset.Dataset.i = 0 | static |

Definition at line 359 of file dataset.py.

| dataset.Dataset.jsondict = json.loads( dasData ) | static |

Definition at line 344 of file dataset.py.

| string dataset.Dataset.jsonfile = "das_query_output_%i.txt" | static |

Definition at line 358 of file dataset.py.

| dataset.Dataset.jsonfile = jsonfile%i | static |

Definition at line 362 of file dataset.py.

| dataset.Dataset.jsonstr = self.__findInJson(jsondict,"reason") | static |

Definition at line 354 of file dataset.py.

| dataset.Dataset.lfnDir |

Definition at line 265 of file dataset.py.

Referenced by dataset.Dataset.printInfo().

| dataset.Dataset.maskExists |

Definition at line 267 of file dataset.py.

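| string dataset.Dataset.msg = "The DAS query returned an error. The output is very long, and has been stored in:\n" | static |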

Definition at line 366 of file dataset.py.

Referenced by MatrixReader.MatrixException.__str__(), cmsHarvester.Usage.__str__(), and cmsHarvester.Error.__str__().

| dataset.Dataset.report |

Definition at line 268 of file dataset.py.

Referenced by addOnTests.testit.run().

| dataset.Dataset.theFile = open( jsonfile, "w" ) | static |

Definition at line 363 of file dataset.py.

1.8.11
