| Public Member Functions | |
|---|---|
| def | __init__ |
| def | getjobid |
| def | monitor |
| def | run |

| Public Member Functions inherited from production_tasks.Task | |
|---|---|
| def | __init__ |
| def | addOption |
| def | getname |
| def | run |

Additional Inherited Members

| Public Attributes inherited from production_tasks.Task | |
|---|---|
| dataset | |
| instance | |
| name | |
| options | |
| user | |

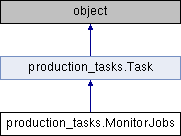

Monitor LSF jobs created with cmsBatch.py. Blocks until all jobs are finished.

Definition at line 575 of file production_tasks.py.
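The blocking behaviour described above can be pictured as a polling loop. The following is a minimal sketch only, not the code in production_tasks.py: the function `wait_for_jobs`, its `get_status` callback, and the polling interval are hypothetical names introduced for illustration. It assumes each job eventually reports one of the LSF terminal states `DONE` or `EXIT`.

```python
import time


def wait_for_jobs(job_ids, get_status, poll_seconds=60.0, sleep=time.sleep):
    """Block until every job reports a terminal state.

    Hypothetical helper: `get_status` is any callable that maps a job id
    to an LSF state string such as "PEND", "RUN", "DONE" or "EXIT".
    Returns a dict of job id -> final state.
    """
    terminal = {"DONE", "EXIT"}  # assumed terminal LSF states
    pending = set(job_ids)
    final = {}
    while pending:
        for job_id in sorted(pending):
            state = get_status(job_id)
            if state in terminal:
                final[job_id] = state
        # Drop the jobs that finished during this pass.
        pending -= set(final)
        if pending:
            # Wait before asking the batch system again.
            sleep(poll_seconds)
    return final
```

Passing the status lookup and the sleep function as arguments keeps the loop independent of any particular batch command, which also makes it easy to exercise without a real LSF cluster.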

def production_tasks.MonitorJobs.__init__ (self, dataset, user, options)

Definition at line 577 of file production_tasks.py.

def production_tasks.MonitorJobs.getjobid (self, job_dir)

Parse the LSF output to find the job ID.

Definition at line 580 of file production_tasks.py.

References mergeVDriftHistosByStation.file, SiPixelLorentzAngle_cfi.read, and split.

Referenced by production_tasks.MonitorJobs.run().
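As an illustration of parsing LSF output for a job ID, here is a minimal sketch. It is not the body of `getjobid`: the helper name `parse_lsf_job_id` is hypothetical, and it takes the captured submission text directly rather than a `job_dir`. It assumes the output contains the usual `bsub` confirmation line of the form `Job <12345> is submitted to queue <name>.`

```python
import re

# Matches the confirmation line printed by bsub on submission, with or
# without the word "default" before "queue".
_SUBMIT_LINE = re.compile(
    r"^Job <(\d+)> is submitted to (?:default )?queue <[^>]+>"
)


def parse_lsf_job_id(text):
    """Return the job id from bsub output as a string, or None if absent.

    Hypothetical helper for illustration only.
    """
    for line in text.splitlines():
        match = _SUBMIT_LINE.match(line.strip())
        if match:
            return match.group(1)
    return None
```

Returning `None` when no confirmation line is found lets the caller decide whether a missing ID is an error or simply a job that was never submitted.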

def production_tasks.MonitorJobs.monitor (self, jobs, previous)

Definition at line 594 of file production_tasks.py.

References gen.parseHeader(), and split.

Referenced by production_tasks.MonitorJobs.run().

def production_tasks.MonitorJobs.run (self, input)

Definition at line 648 of file production_tasks.py.

References python.multivaluedict.append(), crabWrap.checkStatus(), production_tasks.Task.dataset, CalibratedPatElectronProducer.dataset, CalibratedElectronProducer.dataset, edmIntegrityCheck.IntegrityCheck.dataset, genericValidation.GenericValidationData.dataset, mergeVDriftHistosByStation.file, production_tasks.MonitorJobs.getjobid(), join(), CSCDCCUnpacker.monitor, production_tasks.MonitorJobs.monitor(), ElectronMVAID.ElectronMVAID.name, counter.Counter.name, entry.name, average.Average.name, histograms.Histograms.name, cond::persistency::TAG::NAME.name, core.autovars.NTupleVariable.name, TmModule.name, cond::persistency::GLOBAL_TAG::NAME.name, cond::persistency::TAG::TIME_TYPE.name, genericValidation.GenericValidation.name, cond::persistency::GLOBAL_TAG::VALIDITY.name, cond::persistency::TAG::OBJECT_TYPE.name, preexistingValidation.PreexistingValidation.name, cond::persistency::COND_LOG_TABLE::EXECTIME.name, cond::persistency::GLOBAL_TAG::DESCRIPTION.name, cond::persistency::TAG::SYNCHRONIZATION.name, ora::RecordSpecImpl::Item.name, cond::persistency::COND_LOG_TABLE::IOVTAG.name, cond::persistency::GLOBAL_TAG::RELEASE.name, cond::persistency::TAG::END_OF_VALIDITY.name, cond::persistency::COND_LOG_TABLE::USERTEXT.name, cond::persistency::GLOBAL_TAG::SNAPSHOT_TIME.name, cond::persistency::TAG::DESCRIPTION.name, cond::persistency::GTEditorData.name, cond::persistency::GLOBAL_TAG::INSERTION_TIME.name, cond::persistency::TAG::LAST_VALIDATED_TIME.name, FWTGeoRecoGeometry::Info.name, Types._Untracked.name, cond::persistency::TAG::INSERTION_TIME.name, dataset.BaseDataset.name, cond::persistency::TAG::MODIFICATION_TIME.name, personalPlayback.Applet.name, ParameterSet.name, production_tasks.Task.name, PixelDCSObject< class >::Item.name, analyzer.Analyzer.name, DQMRivetClient::LumiOption.name, MagCylinder.name, ParSet.name, alignment.Alignment.name, DQMRivetClient::ScaleFactorOption.name, SingleObjectCondition.name, EgHLTOfflineSummaryClient::SumHistBinData.name, 
core.autovars.NTupleObjectType.name, XMLHTRZeroSuppressionLoader::_loaderBaseConfig.name, XMLRBXPedestalsLoader::_loaderBaseConfig.name, cond::persistency::GTProxyData.name, DQMGenericClient::EfficOption.name, MyWatcher.name, edm::PathTimingSummary.name, cond::TimeTypeSpecs.name, lumi::TriggerInfo.name, edm::PathSummary.name, perftools::EdmEventSize::BranchRecord.name, PixelEndcapLinkMaker::Item.name, cond::persistency::GLOBAL_TAG_MAP::GLOBAL_TAG_NAME.name, FWTableViewManager::TableEntry.name, cond::persistency::GLOBAL_TAG_MAP::RECORD.name, PixelBarrelLinkMaker::Item.name, EcalLogicID.name, Mapper::definition< ScannerT >.name, cond::persistency::GLOBAL_TAG_MAP::LABEL.name, McSelector.name, ExpressionHisto< T >.name, cond::persistency::GLOBAL_TAG_MAP::TAG_NAME.name, RecoSelector.name, XMLProcessor::_loaderBaseConfig.name, cond::persistency::PAYLOAD::HASH.name, DQMGenericClient::ProfileOption.name, TreeCrawler.Package.name, cond::persistency::PAYLOAD::OBJECT_TYPE.name, cond::persistency::PAYLOAD::DATA.name, cond::persistency::PAYLOAD::STREAMER_INFO.name, cond::persistency::PAYLOAD::VERSION.name, MagGeoBuilderFromDDD::volumeHandle.name, cond::persistency::PAYLOAD::INSERTION_TIME.name, DQMGenericClient::NormOption.name, options.ConnectionHLTMenu.name, DQMGenericClient::CDOption.name, FastHFShowerLibrary.name, h4DSegm.name, PhysicsTools::Calibration::Variable.name, cond::TagInfo_t.name, EDMtoMEConverter.name, looper.Looper.name, MEtoEDM< T >::MEtoEDMObject.name, cond::persistency::IOV::TAG_NAME.name, TrackerSectorStruct.name, cond::persistency::IOV::SINCE.name, cond::persistency::IOV::PAYLOAD_HASH.name, cond::persistency::IOV::INSERTION_TIME.name, MuonGeometrySanityCheckPoint.name, config.Analyzer.name, config.Service.name, h2DSegm.name, options.HLTProcessOptions.name, core.autovars.NTupleSubObject.name, DQMNet::WaitObject.name, AlpgenParameterName.name, SiStripMonitorDigi.name, core.autovars.NTupleObject.name, cond::persistency::TAG_MIGRATION::SOURCE_ACCOUNT.name, 
cond::persistency::TAG_MIGRATION::SOURCE_TAG.name, cond::persistency::TAG_MIGRATION::TAG_NAME.name, cond::persistency::TAG_MIGRATION::STATUS_CODE.name, cond::persistency::TAG_MIGRATION::INSERTION_TIME.name, core.autovars.NTupleCollection.name, FastTimerService::LuminosityDescription.name, cond::persistency::PAYLOAD_MIGRATION::SOURCE_ACCOUNT.name, cond::persistency::PAYLOAD_MIGRATION::SOURCE_TOKEN.name, cond::persistency::PAYLOAD_MIGRATION::PAYLOAD_HASH.name, cond::persistency::PAYLOAD_MIGRATION::INSERTION_TIME.name, conddblib.Tag.name, conddblib.GlobalTag.name, personalPlayback.FrameworkJob.name, plotscripts.SawTeethFunction.name, FastTimerService::ProcessDescription.name, hTMaxCell.name, cscdqm::ParHistoDef.name, BeautifulSoup.Tag.name, TiXmlAttribute.name, BeautifulSoup.SoupStrainer.name, FileExportPlugin.FileExportPlugin.options, cmsswPreprocessor.CmsswPreprocessor.options, DOTExport.DotProducer.options, confdb.HLTProcess.options, production_tasks.Task.options, edmIntegrityCheck.IntegrityCheck.options, dataset.BaseDataset.user, EcalTPGParamReaderFromDB.user, production_tasks.Task.user, popcon::RPCObPVSSmapData.user, popcon::RpcDataV.user, popcon::RpcDataT.user, popcon::RpcObGasData.user, popcon::RpcDataGasMix.user, popcon::RpcDataS.user, popcon::RpcDataFebmap.user, popcon::RpcDataI.user, popcon::RpcDataUXC.user, MatrixInjector.MatrixInjector.user, EcalDBConnection.user, and conddblib.TimeType.user.

1.8.5
