Public Member Functions | |

| def | __init__ (self, datasetname, dasinstance=defaultdasinstance) |

| def | __init__ (self, name, user, pattern='.*root') |

| def | buildListOfBadFiles (self) |

| def | buildListOfFiles (self, pattern='.*root') |

| def | extractFileSizes (self) |

| def | getfiles (self, usecache) |

| def | getPrimaryDatasetEntries (self) |

| def | headercomment (self) |

| def | printInfo (self) |

Public Member Functions inherited from dataset.BaseDataset | |

| def | __init__ (self, name, user, pattern='.*root', run_range=None, dbsInstance=None) |

| def | buildListOfBadFiles (self) |

| def | buildListOfFiles (self, pattern) |

| def | extractFileSizes (self) |

| def | getPrimaryDatasetEntries (self) |

| def | listOfFiles (self) |

| def | listOfGoodFiles (self) |

| def | listOfGoodFilesWithPrescale (self, prescale) |

| def | printFiles (self, abspath=True, info=True) |

| def | printInfo (self) |

Public Member Functions inherited from dataset.DatasetBase | |

| def | getfiles (self, usecache) |

| def | headercomment (self) |

| def | writefilelist_hippy (self, firstrun, lastrun, runs, eventsperjob, maxevents, outputfile, usecache=True) |

| def | writefilelist_validation (self, firstrun, lastrun, runs, maxevents, outputfile=None, usecache=True) |

Public Attributes | |

| bad_files | |

| castorDir | |

| dasinstance | |

| datasetname | |

| filenamebase | |

| files | |

| filesAndSizes | |

| good_files | |

| lfnDir | |

| maskExists | |

| official | |

| report | |

Public Attributes inherited from dataset.BaseDataset | |

| bad_files | |

| dbsInstance | |

| files | |

| filesAndSizes | |

| good_files | |

| name | |

| pattern | |

| primaryDatasetEntries | |

| report | |

| run_range | |

| user | |

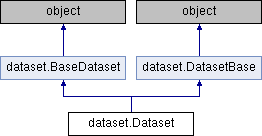

Definition at line 198 of file dataset.py.

| def dataset.Dataset.__init__ | ( | self, datasetname, dasinstance = defaultdasinstance | ) |

Definition at line 199 of file dataset.py.

Referenced by dataset.Dataset.__init__().

| def dataset.Dataset.__init__ | ( | self, name, user, pattern = '.*root' | ) |

Definition at line 267 of file dataset.py.

References dataset.Dataset.__init__().

| def dataset.Dataset.buildListOfBadFiles | ( | self | ) |

Fills the list of bad files from the IntegrityCheck log. When the integrity check file is not available, all files are considered good.

Definition at line 278 of file dataset.py.
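
The fallback rule described for buildListOfBadFiles can be illustrated with a minimal sketch. It assumes the integrity check result has already been parsed into a dictionary that maps each file name to a boolean. That dictionary, the function name split_good_and_bad and its arguments are hypothetical; they are not taken from dataset.py.

```python
def split_good_and_bad(files, integrity_report=None):
    """Return (good_files, bad_files) for a list of file names.

    integrity_report is a hypothetical dict {file name: True if the file is OK}.
    When it is None (no integrity check available), every file counts as good.
    """
    if integrity_report is None:
        return list(files), []

    good, bad = [], []
    for name in files:
        # Assumption of this sketch: a file absent from the report is good.
        if integrity_report.get(name, True):
            good.append(name)
        else:
            bad.append(name)
    return good, bad
```

Calling the function without a report returns every file as good, which mirrors the documented behaviour when the integrity check file is missing.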

| def dataset.Dataset.buildListOfFiles | ( | self, pattern = '.*root' | ) |

Fills the list of files, taking all ROOT files in the castor directory that match the pattern.

Definition at line 274 of file dataset.py.
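
As a rough illustration of how a pattern such as the default '.*root' selects files, the sketch below filters a list of names with Python's re module. The helper select_files is hypothetical, and the use of re.match is an assumption: the actual matching in dataset.py may be done differently.

```python
import re


def select_files(names, pattern='.*root'):
    """Return the names that match the regular expression `pattern`.

    re.match anchors only at the start of the string, so the default
    pattern accepts any name that contains "root" somewhere.
    """
    regex = re.compile(pattern)
    return [name for name in names if regex.match(name)]
```

Because the match is not anchored at the end, a stricter pattern such as r'.*\.root$' may be preferable when only files with the .root extension should be kept.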

| def dataset.Dataset.extractFileSizes | ( | self | ) |

Gets the size of each file from the output of the eos ls -l command.

Definition at line 309 of file dataset.py.

References dataset.EOSDataset.castorDir, and dataset.Dataset.castorDir.
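
The idea behind extractFileSizes can be sketched as parsing a long-format listing into a mapping from file name to size. The sketch assumes the listing follows the usual ls -l column layout (size in the fifth column, name in the last); the real eos output may differ, and parse_long_listing is a hypothetical helper, not a function from dataset.py.

```python
def parse_long_listing(output):
    """Map file name -> size in bytes from ls -l style text.

    Assumed columns: permissions, links, owner, group, size,
    month, day, time, name. File names containing spaces are
    not handled in this sketch.
    """
    sizes = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 9:
            # Skip summary lines such as "total 2" and blank lines.
            continue
        try:
            size = int(fields[4])
        except ValueError:
            # Skip lines whose fifth column is not a number.
            continue
        sizes[fields[-1]] = size
    return sizes
```

Lines that do not fit the assumed layout are skipped rather than raising an error, so a partially unexpected listing still yields the sizes that could be read.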

| def dataset.Dataset.getfiles | ( | self, usecache | ) |

Definition at line 211 of file dataset.py.

References dataset.Dataset.dasinstance, dataset.dasquery(), dataset.Dataset.datasetname, dataset.Dataset.filenamebase, dataset.findinjson(), dataset.int, and print().

| def dataset.Dataset.getPrimaryDatasetEntries | ( | self | ) |

Definition at line 329 of file dataset.py.

References dataset.int, runall.testit.report, WorkFlowRunner.WorkFlowRunner.report, ALIUtils.report, and dataset.BaseDataset.report.

| def dataset.Dataset.headercomment | ( | self | ) |

Definition at line 247 of file dataset.py.

References dataset.Dataset.datasetname.

| def dataset.Dataset.printInfo | ( | self | ) |

Definition at line 324 of file dataset.py.

References dataset.EOSDataset.castorDir, dataset.Dataset.castorDir, dataset.Dataset.lfnDir, ElectronMVAID.ElectronMVAID.name, HFRaddamTask.name, HcalOfflineHarvesting.name, LaserTask.name, HcalOnlineHarvesting.name, NoCQTask.name, PedestalTask.name, QIE10Task.name, QIE11Task.name, RecHitTask.name, UMNioTask.name, ZDCTask.name, AlignableObjectId::entry.name, RawTask.name, average.Average.name, counter.Counter.name, TPTask.name, histograms.Histograms.name, DigiTask.name, LEDTask.name, cond::persistency::TAG::NAME.name, cond::persistency::RUN_INFO::RUN_NUMBER.name, TmModule.name, cond::persistency::GTEditorData.name, cond::persistency::GLOBAL_TAG::NAME.name, cond::persistency::TAG::TIME_TYPE.name, cond::persistency::RUN_INFO::START_TIME.name, cond::persistency::GLOBAL_TAG::VALIDITY.name, cond::persistency::TAG::OBJECT_TYPE.name, cond::persistency::RUN_INFO::END_TIME.name, core.autovars.NTupleVariable.name, cond::persistency::GLOBAL_TAG::DESCRIPTION.name, DQMRivetClient::NormOption.name, cond::persistency::TAG::SYNCHRONIZATION.name, cond::persistency::GLOBAL_TAG::RELEASE.name, cond::persistency::TAG::END_OF_VALIDITY.name, MEPSet.name, cond::persistency::GLOBAL_TAG::SNAPSHOT_TIME.name, cond::persistency::O2O_RUN::JOB_NAME.name, cond::persistency::TAG::DESCRIPTION.name, cms::dd::NameValuePair< T >.name, cond::persistency::GLOBAL_TAG::INSERTION_TIME.name, cond::persistency::O2O_RUN::START_TIME.name, cond::persistency::TAG::LAST_VALIDATED_TIME.name, cond::persistency::O2O_RUN::END_TIME.name, cond::persistency::TAG::INSERTION_TIME.name, FWTGeoRecoGeometry::Info.name, cond::persistency::O2O_RUN::STATUS_CODE.name, cond::persistency::TAG::MODIFICATION_TIME.name, cond::persistency::O2O_RUN::LOG.name, ParameterSet.name, nanoaod::MergeableCounterTable::SingleColumn< T >.name, cond::persistency::TAG::PROTECTION_CODE.name, OutputMEPSet.name, PixelDCSObject< T >::Item.name, dataset.BaseDataset.name, AlignmentConstraint.name, cms::dd::ValuePair< T, U >.name, 
personalPlayback.Applet.name, Types._Untracked.name, MagCylinder.name, analyzer.Analyzer.name, DQMRivetClient::LumiOption.name, heppy::ParSet.name, cond::persistency::GTProxyData.name, SingleObjectCondition.name, DQMRivetClient::ScaleFactorOption.name, edm::PathTimingSummary.name, cms::DDAlgoArguments.name, EgHLTOfflineSummaryClient::SumHistBinData.name, Barrel.name, cond::TimeTypeSpecs.name, perftools::EdmEventSize::BranchRecord.name, core.autovars.NTupleObjectType.name, EcalLogicID.name, edm::PathSummary.name, lumi::TriggerInfo.name, XMLProcessor::_loaderBaseConfig.name, PixelEndcapLinkMaker::Item.name, MEtoEDM< T >::MEtoEDMObject.name, FWTableViewManager::TableEntry.name, PixelBarrelLinkMaker::Item.name, ExpressionHisto< T >.name, DQMGenericClient::EfficOption.name, Supermodule.name, TreeCrawler.Package.name, cond::persistency::GLOBAL_TAG_MAP::GLOBAL_TAG_NAME.name, options.ConnectionHLTMenu.name, cond::persistency::GLOBAL_TAG_MAP::RECORD.name, cond::persistency::GLOBAL_TAG_MAP::LABEL.name, cms::DDParsingContext::CompositeMaterial.name, cond::persistency::GLOBAL_TAG_MAP::TAG_NAME.name, cond::Tag_t.name, dqmoffline::l1t::HistDefinition.name, DQMGenericClient::ProfileOption.name, magneticfield::BaseVolumeHandle.name, FastHFShowerLibrary.name, nanoaod::MergeableCounterTable::VectorColumn< T >.name, emtf::Node.name, h4DSegm.name, DQMGenericClient::NormOption.name, core.TriggerMatchAnalyzer.TriggerMatchAnalyzer.name, DQMGenericClient::CDOption.name, CounterChecker.name, cond::TagInfo_t.name, TrackerSectorStruct.name, MuonGeometrySanityCheckPoint.name, PhysicsTools::Calibration::Variable.name, DQMGenericClient::NoFlowOption.name, cond::persistency::PAYLOAD::HASH.name, FCDTask.name, EDMtoMEConverter.name, Mapper::definition< ScannerT >.name, looper.Looper.name, cond::persistency::PAYLOAD::OBJECT_TYPE.name, cond::persistency::PAYLOAD::DATA.name, cond::persistency::PAYLOAD::STREAMER_INFO.name, cond::persistency::PAYLOAD::VERSION.name, 
cond::persistency::PAYLOAD::INSERTION_TIME.name, classes.MonitorData.name, HistogramManager.name, classes.OutputData.name, BPHDecayToResResBuilderBase::DZSelect.name, Crystal.name, h2DSegm.name, options.HLTProcessOptions.name, cond::persistency::IOV::TAG_NAME.name, cond::persistency::IOV::SINCE.name, cond::persistency::IOV::PAYLOAD_HASH.name, cond::persistency::IOV::INSERTION_TIME.name, DQMNet::WaitObject.name, core.TriggerBitAnalyzer.TriggerBitAnalyzer.name, AlpgenParameterName.name, config.Analyzer.name, geometry.Structure.name, core.autovars.NTupleSubObject.name, Capsule.name, core.autovars.NTupleObject.name, Ceramic.name, SiStripMonitorDigi.name, BulkSilicon.name, config.Service.name, APD.name, core.autovars.NTupleCollection.name, BPHRecoBuilder::BPHRecoSource.name, nanoaod::FlatTable::Column.name, BPHRecoBuilder::BPHCompSource.name, StraightTrackAlignment::RPSetPlots.name, cond::persistency::TAG_AUTHORIZATION::TAG_NAME.name, cond::persistency::TAG_AUTHORIZATION::ACCESS_TYPE.name, cond::persistency::TAG_AUTHORIZATION::CREDENTIAL.name, cond::persistency::TAG_AUTHORIZATION::CREDENTIAL_TYPE.name, InnerLayerVolume.name, cond::payloadInspector::TagReference.name, cond::persistency::TAG_LOG::TAG_NAME.name, cond::persistency::TAG_LOG::EVENT_TIME.name, cond::persistency::TAG_LOG::USER_NAME.name, cond::persistency::TAG_LOG::HOST_NAME.name, cond::persistency::TAG_LOG::COMMAND.name, cond::persistency::TAG_LOG::ACTION.name, cond::persistency::TAG_LOG::USER_TEXT.name, personalPlayback.FrameworkJob.name, Grid.name, trklet::TrackletConfigBuilder::DTCinfo.name, Grille.name, BackPipe.name, plotscripts.SawTeethFunction.name, PatchPanel.name, BackCoolTank.name, DryAirTube.name, crabFunctions.CrabTask.name, MBCoolTube.name, MBManif.name, cscdqm::ParHistoDef.name, hTMaxCell.name, SummaryOutputProducer::GenericSummary.name, and print().

| dataset.Dataset.bad_files |

Definition at line 285 of file dataset.py.

| dataset.Dataset.castorDir |

Definition at line 269 of file dataset.py.

Referenced by dataset.Dataset.extractFileSizes(), and dataset.Dataset.printInfo().

| dataset.Dataset.dasinstance |

Definition at line 208 of file dataset.py.

Referenced by dataset.Dataset.getfiles().

| dataset.Dataset.datasetname |

Definition at line 200 of file dataset.py.

Referenced by dataset.Dataset.getfiles(), and dataset.Dataset.headercomment().

| dataset.Dataset.filenamebase |

Definition at line 203 of file dataset.py.

Referenced by dataset.Dataset.getfiles().

| dataset.Dataset.files |

Definition at line 276 of file dataset.py.

| dataset.Dataset.filesAndSizes |

Definition at line 314 of file dataset.py.

| dataset.Dataset.good_files |

Definition at line 286 of file dataset.py.

| dataset.Dataset.lfnDir |

Definition at line 268 of file dataset.py.

Referenced by dataset.Dataset.printInfo().

| dataset.Dataset.maskExists |

Definition at line 270 of file dataset.py.

| dataset.Dataset.official |

Definition at line 202 of file dataset.py.

| dataset.Dataset.report |

Definition at line 271 of file dataset.py.

Referenced by addOnTests.testit.run().

1.8.14
