#include <TritonClient.h>

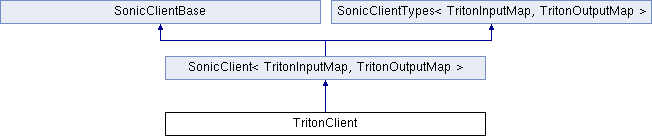

Classes | |

| struct | ServerSideStats |

Public Member Functions | |

| unsigned | batchSize () const |

| bool | noBatch () const |

| void | reset () override |

| TritonServerType | serverType () const |

| bool | setBatchSize (unsigned bsize) |

| void | setUseSharedMemory (bool useShm) |

| TritonClient (const edm::ParameterSet &params, const std::string &debugName) | |

| bool | useSharedMemory () const |

| bool | verbose () const |

| ~TritonClient () override | |

Public Member Functions inherited from SonicClient< TritonInputMap, TritonOutputMap > | |

| SonicClient (const edm::ParameterSet &params, const std::string &debugName, const std::string &clientName) | |

Public Member Functions inherited from SonicClientBase | |

| const std::string & | clientName () const |

| const std::string & | debugName () const |

| virtual void | dispatch (edm::WaitingTaskWithArenaHolder holder) |

| virtual void | dispatch () |

| SonicMode | mode () const |

| SonicClientBase (const edm::ParameterSet &params, const std::string &debugName, const std::string &clientName) | |

| virtual | ~SonicClientBase ()=default |

Public Member Functions inherited from SonicClientTypes< TritonInputMap, TritonOutputMap > | |

| Input & | input () |

| const Output & | output () const |

| virtual | ~SonicClientTypes ()=default |

Static Public Member Functions | |

| static void | fillPSetDescription (edm::ParameterSetDescription &iDesc) |

Static Public Member Functions inherited from SonicClientBase | |

| static void | fillBasePSetDescription (edm::ParameterSetDescription &desc, bool allowRetry=true) |

Protected Member Functions | |

| void | evaluate () override |

| void | getResults (std::shared_ptr< triton::client::InferResult > results) |

| inference::ModelStatistics | getServerSideStatus () const |

| template<typename F > bool | handle_exception (F &&call) |

| void | reportServerSideStats (const ServerSideStats &stats) const |

| ServerSideStats | summarizeServerStats (const inference::ModelStatistics &start_status, const inference::ModelStatistics &end_status) const |

Protected Member Functions inherited from SonicClientBase | |

| void | finish (bool success, std::exception_ptr eptr=std::exception_ptr{}) |

| void | setMode (SonicMode mode) |

| void | start (edm::WaitingTaskWithArenaHolder holder) |

| void | start () |

Protected Attributes | |

| unsigned | batchSize_ |

| std::unique_ptr< triton::client::InferenceServerGrpcClient > | client_ |

| grpc_compression_algorithm | compressionAlgo_ |

| triton::client::Headers | headers_ |

| std::vector< triton::client::InferInput * > | inputsTriton_ |

| unsigned | maxBatchSize_ |

| bool | noBatch_ |

| triton::client::InferOptions | options_ |

| std::vector< const triton::client::InferRequestedOutput * > | outputsTriton_ |

| TritonServerType | serverType_ |

| bool | useSharedMemory_ |

| bool | verbose_ |

Protected Attributes inherited from SonicClientBase | |

| unsigned | allowedTries_ |

| std::string | clientName_ |

| std::string | debugName_ |

| std::unique_ptr< SonicDispatcher > | dispatcher_ |

| std::string | fullDebugName_ |

| std::optional< edm::WaitingTaskWithArenaHolder > | holder_ |

| SonicMode | mode_ |

| unsigned | tries_ |

| bool | verbose_ |

Protected Attributes inherited from SonicClientTypes< TritonInputMap, TritonOutputMap > | |

| Input | input_ |

| Output | output_ |

Private Member Functions | |

| auto | client () |

Private Attributes | |

| friend | TritonInputData |

| friend | TritonOutputData |

Additional Inherited Members | |

Public Types inherited from SonicClientTypes< TritonInputMap, TritonOutputMap > | |

| typedef TritonInputMap | Input |

| typedef TritonOutputMap | Output |

Definition at line 19 of file TritonClient.h.

Constructor & Destructor Documentation | |

| TritonClient::TritonClient | ( | const edm::ParameterSet & | params, |

| | const std::string & | debugName | |

| ) | |

Definition at line 39 of file TritonClient.cc.

References client_, SonicClientBase::fullDebugName_, SonicClientTypes< TritonInputMap, TritonOutputMap >::input_, inputsTriton_, LocalCPU, max, maxBatchSize_, msg, noBatch_, oname, options_, SonicClientTypes< TritonInputMap, TritonOutputMap >::output_, outputsTriton_, params, TritonService::pid(), triton_utils::printColl(), server, TritonService::serverInfo(), serverType_, setBatchSize(), SonicClientBase::setMode(), std::string, success, Sync, TRITON_THROW_IF_ERROR, and verbose_.
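The constructor references maxBatchSize_, noBatch_, max and setBatchSize(). In Triton's model configuration, `max_batch_size = 0` marks a model that has no batch dimension. The sketch below shows one plausible way these pieces fit together, assuming the client records the no-batch case separately and clamps its local maximum to at least 1; `BatchConfig` and `configureBatching` are hypothetical names, not CMSSW code.

```cpp
#include <algorithm>

// Hypothetical stand-in for the batch-related state of TritonClient.
struct BatchConfig {
  unsigned maxBatchSize = 0;  // local maximum, never below 1
  bool noBatch = false;       // true if the model has no batch dimension
};

// Assumption: serverMaxBatchSize is the model's max_batch_size as reported by
// the Triton server, where 0 means "batching not supported".
BatchConfig configureBatching(unsigned serverMaxBatchSize) {
  BatchConfig cfg;
  cfg.noBatch = (serverMaxBatchSize == 0);
  // Keep a usable local maximum of 1 so a single (unbatched) request can still be sent.
  cfg.maxBatchSize = std::max(1u, serverMaxBatchSize);
  return cfg;
}
```

With this split, a batch size check such as the one in setBatchSize() never has to special-case a maximum of 0, while noBatch() can still tell callers that the model expects tensors without a leading batch dimension.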

| TritonClient::~TritonClient | ( | | ) | |

override

Definition at line 169 of file TritonClient.cc.

References SonicClientTypes< TritonInputMap, TritonOutputMap >::input_, and SonicClientTypes< TritonInputMap, TritonOutputMap >::output_.

Member Function Documentation | |

| unsigned TritonClient::batchSize | ( | | ) | const |

inline

| auto TritonClient::client | ( | | ) | |

inline, private

Definition at line 87 of file TritonClient.h.

References client_.

| void TritonClient::evaluate | ( | | ) | |

override, protected, virtual

Implements SonicClientBase.

Definition at line 242 of file TritonClient.cc.

References Async, batchSize_, client_, compressionAlgo_, SonicClientBase::finish(), getResults(), getServerSideStatus(), handle_exception(), headers_, inputsTriton_, SonicClientBase::mode_, options_, SonicClientTypes< TritonInputMap, TritonOutputMap >::output_, outputsTriton_, reportServerSideStats(), results, stats, success, summarizeServerStats(), TRITON_THROW_IF_ERROR, and verbose().
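evaluate() references both Async and SonicClientBase::mode_, so its control flow depends on the client mode. The sketch below illustrates only that split, under stated assumptions: in a synchronous mode the call blocks and finishes immediately, while in an asynchronous mode the result is delivered later by a completion handler. `std::async` stands in for the remote asynchronous request, and `Mode`, `SketchClient`, `infer` and `finish` are hypothetical names; the statistics and result-handling steps that evaluate() also references are omitted.

```cpp
#include <functional>
#include <future>
#include <optional>
#include <utility>

// Hypothetical mode enum mirroring the Sync/Async distinction.
enum class Mode { Sync, Async };

// Hypothetical client: 'infer' stands in for the remote inference call and
// finish() for SonicClientBase::finish().
class SketchClient {
 public:
  SketchClient(Mode mode, std::function<int()> infer) : mode_(mode), infer_(std::move(infer)) {}
  SketchClient(const SketchClient&) = delete;  // the async task captures 'this'
  SketchClient& operator=(const SketchClient&) = delete;

  void evaluate() {
    if (mode_ == Mode::Async) {
      // Launch the call on another thread; finish() runs when it completes.
      pending_ = std::async(std::launch::async, [this] { finish(infer_()); });
    } else {
      // Block until the result is available, then finish immediately.
      finish(infer_());
    }
  }

  // Wait for an outstanding asynchronous call (no-op in Sync mode).
  void wait() {
    if (pending_.valid())
      pending_.get();
  }

  std::optional<int> result() const { return result_; }

 private:
  void finish(int value) { result_ = value; }

  Mode mode_;
  std::function<int()> infer_;
  std::optional<int> result_;
  // Declared last so it is destroyed first: its destructor blocks until the
  // task is done, before the members the task touches are destroyed.
  std::future<void> pending_;
};
```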

| void TritonClient::fillPSetDescription | ( | edm::ParameterSetDescription & | iDesc | ) | |

static

Definition at line 406 of file TritonClient.cc.

References edm::ParameterSetDescription::add(), edm::ParameterSetDescription::addUntracked(), SonicClientBase::fillBasePSetDescription(), and std::string.

Referenced by DeepMETSonicProducer::fillDescriptions(), ParticleNetSonicJetTagsProducer::fillDescriptions(), SCEnergyCorrectorDRNProducer::fillDescriptions(), and DRNCorrectionProducerT< T >::fillDescriptions().

| void TritonClient::getResults | ( | std::shared_ptr< triton::client::InferResult > | results | ) | |

protected

Definition at line 225 of file TritonClient.cc.

References noBatch_, oname, SonicClientTypes< TritonInputMap, TritonOutputMap >::output(), SonicClientTypes< TritonInputMap, TritonOutputMap >::output_, results, and TRITON_THROW_IF_ERROR.

Referenced by evaluate().

| inference::ModelStatistics TritonClient::getServerSideStatus | ( | | ) | const |

protected

Definition at line 395 of file TritonClient.cc.

References client_, options_, TRITON_THROW_IF_ERROR, and verbose_.

Referenced by evaluate().

| template<typename F > bool TritonClient::handle_exception | ( | F && | call | ) | |

protected

Definition at line 206 of file TritonClient.cc.

References CMS_SA_ALLOW, e, and SonicClientBase::finish().

Referenced by evaluate().
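handle_exception() takes an arbitrary callable and references SonicClientBase::finish(), which accepts a success flag and a `std::exception_ptr`. A common way to combine these is to run the callable inside `try`, and on any exception hand the captured exception to `finish(false, ...)` instead of letting it escape. The sketch below assumes that pattern; `FinishRecorder` and `handleException` are hypothetical stand-ins, and the CMS_SA_ALLOW static-analysis annotation is not reproduced.

```cpp
#include <exception>
#include <utility>

// Hypothetical stand-in for SonicClientBase::finish(bool, std::exception_ptr).
struct FinishRecorder {
  bool called = false;
  bool success = true;
  std::exception_ptr eptr;

  void finish(bool ok, std::exception_ptr e = std::exception_ptr{}) {
    called = true;
    success = ok;
    eptr = std::move(e);
  }
};

// Invoke 'call'. If it throws anything, capture the exception, report failure
// through finish(), and return false instead of rethrowing.
template <typename F>
bool handleException(FinishRecorder& rec, F&& call) {
  try {
    std::forward<F>(call)();
    return true;
  } catch (...) {
    rec.finish(false, std::current_exception());
    return false;
  }
}
```

Returning a bool lets the caller stop its own work early on failure, while the stored `exception_ptr` lets whoever waits on the result rethrow the original exception later.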

| bool TritonClient::noBatch | ( | | ) | const |

inline

| void TritonClient::reportServerSideStats | ( | const ServerSideStats & | stats | ) | const |

protected

Definition at line 337 of file TritonClient.cc.

References count, SonicClientBase::debugName_, SonicClientBase::fullDebugName_, msg, and stats.

Referenced by evaluate().
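reportServerSideStats() references a count, a message and the ServerSideStats fields. A minimal sketch of such a report, assuming each cumulative time is divided by the number of successful requests and converted from nanoseconds to microseconds, is shown below. The struct mirrors the ServerSideStats field names listed for summarizeServerStats(); the function name, output format and units are assumptions, not the CMSSW implementation.

```cpp
#include <cstdint>
#include <sstream>
#include <string>

// Hypothetical mirror of TritonClient::ServerSideStats.
struct ServerSideStats {
  std::uint64_t inference_count_ = 0;
  std::uint64_t execution_count_ = 0;
  std::uint64_t success_count_ = 0;
  std::uint64_t cumm_time_ns_ = 0;
  std::uint64_t queue_time_ns_ = 0;
  std::uint64_t compute_input_time_ns_ = 0;
  std::uint64_t compute_infer_time_ns_ = 0;
  std::uint64_t compute_output_time_ns_ = 0;
};

// Format per-request averages in microseconds (integer division).
// Returns an empty string if no request succeeded, to avoid dividing by zero.
std::string formatServerStats(const ServerSideStats& stats) {
  const std::uint64_t count = stats.success_count_;
  if (count == 0)
    return "";
  auto avgUs = [count](std::uint64_t ns) { return ns / count / 1000; };
  std::ostringstream msg;
  msg << "inferences=" << stats.inference_count_ << " executions=" << stats.execution_count_
      << " avg_us: total=" << avgUs(stats.cumm_time_ns_) << " queue=" << avgUs(stats.queue_time_ns_)
      << " input=" << avgUs(stats.compute_input_time_ns_)
      << " infer=" << avgUs(stats.compute_infer_time_ns_)
      << " output=" << avgUs(stats.compute_output_time_ns_);
  return msg.str();
}
```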

| void TritonClient::reset | ( | | ) | |

override, virtual

Reimplemented from SonicClientBase.

Definition at line 196 of file TritonClient.cc.

References SonicClientTypes< TritonInputMap, TritonOutputMap >::input_, and SonicClientTypes< TritonInputMap, TritonOutputMap >::output_.

| TritonServerType TritonClient::serverType | ( | | ) | const |

inline

| bool TritonClient::setBatchSize | ( | unsigned | bsize | ) |

Definition at line 178 of file TritonClient.cc.

References batchSize_, SonicClientBase::fullDebugName_, SonicClientTypes< TritonInputMap, TritonOutputMap >::input_, maxBatchSize_, and SonicClientTypes< TritonInputMap, TritonOutputMap >::output_.

Referenced by TritonClient().
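setBatchSize() returns a bool and references batchSize_, maxBatchSize_, input_ and output_. The sketch below assumes the natural reading of those references: a request above the maximum is rejected and the current size is kept, otherwise the new size is stored and propagated to every input and output. The reference to fullDebugName_ suggests the real method also logs the rejection, which the sketch omits. `TensorData` and `BatchSizeSketch` are hypothetical stand-ins for TritonInputData/TritonOutputData and TritonClient.

```cpp
#include <map>
#include <string>

// Hypothetical per-tensor record standing in for TritonInputData/TritonOutputData.
struct TensorData {
  unsigned batchSize = 1;
  void setBatchSize(unsigned bsize) { batchSize = bsize; }
};

// Hypothetical reduction of TritonClient to the state setBatchSize() touches.
class BatchSizeSketch {
 public:
  explicit BatchSizeSketch(unsigned maxBatchSize) : maxBatchSize_(maxBatchSize) {}

  // Reject sizes above the maximum, leaving the current size unchanged;
  // otherwise store the new size and propagate it to every input and output.
  bool setBatchSize(unsigned bsize) {
    if (bsize > maxBatchSize_)
      return false;
    batchSize_ = bsize;
    for (auto& entry : input_)
      entry.second.setBatchSize(bsize);
    for (auto& entry : output_)
      entry.second.setBatchSize(bsize);
    return true;
  }

  unsigned batchSize() const { return batchSize_; }

  std::map<std::string, TensorData> input_;
  std::map<std::string, TensorData> output_;

 private:
  unsigned maxBatchSize_;
  unsigned batchSize_ = 1;
};
```

Returning false instead of throwing leaves the decision to the calling producer, which can, for example, split its work into several requests.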

| void TritonClient::setUseSharedMemory | ( | bool | useShm | ) | |

inline

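| TritonClient::ServerSideStats TritonClient::summarizeServerStats | ( | const inference::ModelStatistics & start_status, const inference::ModelStatistics & end_status | ) | const |

protected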

Definition at line 374 of file TritonClient.cc.

References TritonClient::ServerSideStats::compute_infer_time_ns_, TritonClient::ServerSideStats::compute_input_time_ns_, TritonClient::ServerSideStats::compute_output_time_ns_, TritonClient::ServerSideStats::cumm_time_ns_, TritonClient::ServerSideStats::execution_count_, TritonClient::ServerSideStats::inference_count_, TritonClient::ServerSideStats::queue_time_ns_, and TritonClient::ServerSideStats::success_count_.

Referenced by evaluate().
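summarizeServerStats() takes a start and an end status and fills the ServerSideStats fields listed above. Triton's per-model statistics are cumulative counters, so a reasonable assumption is that the summary is the field-by-field difference between the two snapshots. The sketch below shows that subtraction; `StatsSnapshot` is a hypothetical flat replacement for the protobuf type `inference::ModelStatistics`, and `summarize` is a hypothetical name.

```cpp
#include <cstdint>

// Hypothetical flat snapshot of a model's cumulative server-side counters
// (the real input type, inference::ModelStatistics, is not reproduced here).
struct StatsSnapshot {
  std::uint64_t inference_count = 0;
  std::uint64_t execution_count = 0;
  std::uint64_t success_count = 0;
  std::uint64_t cumm_time_ns = 0;
  std::uint64_t queue_time_ns = 0;
  std::uint64_t compute_input_time_ns = 0;
  std::uint64_t compute_infer_time_ns = 0;
  std::uint64_t compute_output_time_ns = 0;
};

// Hypothetical mirror of TritonClient::ServerSideStats.
struct ServerSideStats {
  std::uint64_t inference_count_ = 0;
  std::uint64_t execution_count_ = 0;
  std::uint64_t success_count_ = 0;
  std::uint64_t cumm_time_ns_ = 0;
  std::uint64_t queue_time_ns_ = 0;
  std::uint64_t compute_input_time_ns_ = 0;
  std::uint64_t compute_infer_time_ns_ = 0;
  std::uint64_t compute_output_time_ns_ = 0;
};

// The counters only grow, so the activity between two snapshots is end - start.
ServerSideStats summarize(const StatsSnapshot& start, const StatsSnapshot& end) {
  ServerSideStats s;
  s.inference_count_ = end.inference_count - start.inference_count;
  s.execution_count_ = end.execution_count - start.execution_count;
  s.success_count_ = end.success_count - start.success_count;
  s.cumm_time_ns_ = end.cumm_time_ns - start.cumm_time_ns;
  s.queue_time_ns_ = end.queue_time_ns - start.queue_time_ns;
  s.compute_input_time_ns_ = end.compute_input_time_ns - start.compute_input_time_ns;
  s.compute_infer_time_ns_ = end.compute_infer_time_ns - start.compute_infer_time_ns;
  s.compute_output_time_ns_ = end.compute_output_time_ns - start.compute_output_time_ns;
  return s;
}
```

Because the counters belong to the model on the server rather than to this client, the difference may also include requests sent by other clients of the same model during the same interval.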

| bool TritonClient::useSharedMemory | ( | | ) | const |

inline

| bool TritonClient::verbose | ( | | ) | const |

inline

Member Data Documentation | |

| unsigned TritonClient::batchSize_ |

protected

Definition at line 66 of file TritonClient.h.

Referenced by batchSize(), evaluate(), and setBatchSize().

| std::unique_ptr< triton::client::InferenceServerGrpcClient > TritonClient::client_ |

protected

Definition at line 78 of file TritonClient.h.

Referenced by client(), evaluate(), getServerSideStatus(), and TritonClient().

| grpc_compression_algorithm TritonClient::compressionAlgo_ |

protected

Definition at line 71 of file TritonClient.h.

Referenced by evaluate().

| triton::client::Headers TritonClient::headers_ |

protected

Definition at line 72 of file TritonClient.h.

Referenced by evaluate().

| std::vector< triton::client::InferInput * > TritonClient::inputsTriton_ |

protected

Definition at line 75 of file TritonClient.h.

Referenced by evaluate(), and TritonClient().

| unsigned TritonClient::maxBatchSize_ |

protected

Definition at line 65 of file TritonClient.h.

Referenced by setBatchSize(), and TritonClient().

| bool TritonClient::noBatch_ |

protected

Definition at line 67 of file TritonClient.h.

Referenced by getResults(), noBatch(), and TritonClient().

| triton::client::InferOptions TritonClient::options_ |

protected

Definition at line 80 of file TritonClient.h.

Referenced by evaluate(), getServerSideStatus(), and TritonClient().

| std::vector< const triton::client::InferRequestedOutput * > TritonClient::outputsTriton_ |

protected

Definition at line 76 of file TritonClient.h.

Referenced by evaluate(), and TritonClient().

| TritonServerType TritonClient::serverType_ |

protected

Definition at line 70 of file TritonClient.h.

Referenced by serverType(), and TritonClient().

| friend TritonClient::TritonInputData |

private

Definition at line 83 of file TritonClient.h.

| friend TritonClient::TritonOutputData |

private

Definition at line 84 of file TritonClient.h.

| bool TritonClient::useSharedMemory_ |

protected

Definition at line 69 of file TritonClient.h.

Referenced by setUseSharedMemory(), and useSharedMemory().

| bool TritonClient::verbose_ |

protected

Definition at line 68 of file TritonClient.h.

Referenced by getServerSideStatus(), TritonClient(), and verbose().

Generated by Doxygen 1.8.14
