#include <NTSession.h>

Classes | |

| struct | ExecutorsAndKeys |

| struct | FunctionInfo |

| struct | PerPartitionExecutorsAndLib |

| struct | RunState |

| struct | RunStateArgs |

Public Types | |

| typedef std::function< void(Session *)> | CloseCallback |

| typedef std::vector< std::pair< string, Tensor > > | NamedTensorList |

| typedef std::unordered_map< StringPiece, Node *, StringPieceHasher > | NameNodeMap |

Public Member Functions | |

| ::tensorflow::Status | Close () override |

| ::tensorflow::Status | Create (const GraphDef &graph) override |

| void | ExportCostModels (CostModelManager::CostModelMap *cost_models) |

| ::tensorflow::Status | Extend (const GraphDef &graph) override |

| ::tensorflow::Status | ListDevices (std::vector< DeviceAttributes > *response) override |

| ::tensorflow::Status | LocalDeviceManager (const DeviceMgr **output) override |

| NTSession (const SessionOptions &options, const DeviceMgr *device_mgr, NTSessionFactory *factory) | |

| ::tensorflow::Status | PRun (const string &handle, const NamedTensorList &inputs, const std::vector< string > &output_names, std::vector< Tensor > *outputs) override |

| ::tensorflow::Status | PRunSetup (const std::vector< string > &input_names, const std::vector< string > &output_names, const std::vector< string > &target_nodes, string *handle) override |

| ::tensorflow::Status | Reset (const std::vector< string > &containers) |

| ::tensorflow::Status | Run (const NamedTensorList &inputs, const std::vector< string > &output_names, const std::vector< string > &target_nodes, std::vector< Tensor > *outputs) override |

| ::tensorflow::Status | Run (const ::tensorflow::RunOptions &run_options, const NamedTensorList &inputs, const std::vector< string > &output_names, const std::vector< string > &target_nodes, std::vector< Tensor > *outputs, RunMetadata *run_metadata) override |

| ~NTSession () override | |

Private Member Functions | |

| ::tensorflow::Status | CheckFetch (const std::vector< std::pair< string, Tensor >> &feeds, const std::vector< string > &fetches, const ExecutorsAndKeys *executors_and_keys, const RunState *run_state) |

| ::tensorflow::Status | CheckNotClosed () |

| ::tensorflow::Status | CreateDebuggerState (const DebugOptions &debug_options, int64 session_run_index, int64 executor_step_index, const std::vector< string > &input_names, const std::vector< string > &output_names, const std::vector< string > &target_names, std::unique_ptr< DebuggerStateInterface > *debugger_state) |

| ::tensorflow::Status | CreateGraphs (const BuildGraphOptions &options, std::unordered_map< string, std::unique_ptr< Graph >> *outputs, std::unique_ptr< FunctionLibraryDefinition > *flib_def, RunStateArgs *run_state_args, DataTypeVector *input_types, DataTypeVector *output_types) |

| ::tensorflow::Status | DecorateAndPublishGraphForDebug (const DebugOptions &debug_options, Graph *graph, Device *device) |

| ::tensorflow::Status | ExtendLocked (const GraphDef &graph) EXCLUSIVE_LOCKS_REQUIRED(graph_def_lock_) |

| ::tensorflow::Status | GetOrCreateExecutors (gtl::ArraySlice< string > inputs, gtl::ArraySlice< string > outputs, gtl::ArraySlice< string > target_nodes, ExecutorsAndKeys **executors_and_keys, RunStateArgs *run_state_args) |

| bool graph_created_ | GUARDED_BY (graph_def_lock_) |

| GraphDef graph_def_ | GUARDED_BY (graph_def_lock_) |

| std::vector< std::unique_ptr< FunctionInfo > > functions_ | GUARDED_BY (executor_lock_) |

| std::unordered_map< string, std::shared_ptr< ExecutorsAndKeys > > executors_ | GUARDED_BY (executor_lock_) |

| std::unordered_map< string, std::unique_ptr< RunState > > partial_runs_ | GUARDED_BY (executor_lock_) |

| std::unordered_map< string, string > stateful_placements_ | GUARDED_BY (graph_def_lock_) |

| std::unique_ptr< GraphExecutionState > execution_state_ | GUARDED_BY (graph_def_lock_) |

| bool closed_ | GUARDED_BY (closed_lock_) |

| Status | MaybeInitializeExecutionState (const GraphDef &graph, bool *out_already_initialized) EXCLUSIVE_LOCKS_REQUIRED(graph_def_lock_) |

| ::tensorflow::Status | RecvPRunOutputs (const std::vector< string > &output_names, const ExecutorsAndKeys *executors_and_keys, RunState *run_state, std::vector< Tensor > *outputs) |

| ::tensorflow::Status | ResourceHandleToInputTensor (const Tensor &resource_tensor, Tensor *retrieved_tensor) |

| void | SchedClosure (std::function< void()> c) |

| ::tensorflow::Status | SendPRunInputs (const std::vector< std::pair< string, Tensor >> &inputs, const ExecutorsAndKeys *executors_and_keys, IntraProcessRendezvous *rendez) |

| TF_DISALLOW_COPY_AND_ASSIGN (NTSession) | |

| ::tensorflow::Status | WaitForNotification (Notification *n, int64 timeout_in_ms) |

| void | WaitForNotification (RunState *run_state, CancellationManager *cm, int64 timeout_in_ms) |

Private Attributes | |

| CancellationManager * | cancellation_manager_ |

| mutex | closed_lock_ |

| CostModelManager | cost_model_manager_ |

| const std::unique_ptr< const DeviceMgr > | device_mgr_ |

| DeviceSet | device_set_ |

| std::vector< Device * > | devices_ |

| std::atomic< int64 > | edge_name_counter_ = {0} |

| mutex | executor_lock_ |

| NTSessionFactory *const | factory_ |

| std::unique_ptr< FunctionLibraryDefinition > | flib_def_ |

| mutex | graph_def_lock_ |

| std::atomic< int64 > | handle_name_counter_ = {0} |

| Status | init_error_ |

| Executor::Args::NodeOutputsCallback | node_outputs_callback_ = nullptr |

| const int64 | operation_timeout_in_ms_ = 0 |

| const SessionOptions | options_ |

| string | session_handle_ |

| SessionState | session_state_ |

| bool | sync_on_finish_ = true |

Static Private Attributes | |

| static std::atomic_int_fast64_t | step_id_counter_ |

Friends | |

| class | DebugGateway |

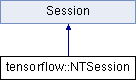

Definition at line 71 of file NTSession.h.

| typedef std::function<void(Session*)> tensorflow::NTSession::CloseCallback |

Definition at line 73 of file NTSession.h.

| typedef std::vector<std::pair<string, Tensor> > tensorflow::NTSession::NamedTensorList |

Definition at line 82 of file NTSession.h.

| typedef std::unordered_map<StringPiece, Node*, StringPieceHasher> tensorflow::NTSession::NameNodeMap |

Definition at line 83 of file NTSession.h.

| tensorflow::NTSession::NTSession (const SessionOptions &options, const DeviceMgr *device_mgr, NTSessionFactory *factory) |

Definition at line 191 of file NTSession.cc.

References ztail::d, device_mgr_, device_set_, devices_, dqm::qstatus::ERROR, unpackBuffers-CaloStage2::INFO, LOG, session_handle_, btagGenBb_cfi::Status, mps_update::status, and sync_on_finish_.

| tensorflow::NTSession::~NTSession () | override |

Definition at line 231 of file NTSession.cc.

References cancellation_manager_, Close(), ztail::d, device_mgr_, flib_def_, and session_handle_.

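| ::tensorflow::Status tensorflow::NTSession::CheckFetch (const std::vector< std::pair< string, Tensor >> &feeds, const std::vector< string > &fetches, const ExecutorsAndKeys *executors_and_keys, const RunState *run_state) | private |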

Definition at line 854 of file NTSession.cc.

References executor_lock_, cond::persistency::fetch(), dqmdumpme::first, tensorflow::NTSession::ExecutorsAndKeys::graph, triggerObjects_cff::id, input, cmsLHEtoEOSManager::l, dqmiodumpmetadata::n, tensorflow::NTSession::ExecutorsAndKeys::name_to_node, edm::errors::NotFound, tensorflow::NTSession::RunState::pending_inputs, svgfig::stack, and class-composition::visited.

Referenced by PRun().

| ::tensorflow::Status tensorflow::NTSession::CheckNotClosed () | inline private |

Definition at line 266 of file NTSession.h.

References closed_lock_, CreateDebuggerState(), DecorateAndPublishGraphForDebug(), tensorflow::NTSession::PerPartitionExecutorsAndLib::device, tensorflow::NTSession::PerPartitionExecutorsAndLib::graph, pfDeepBoostedJetPreprocessParams_cfi::input_names, cmsLHEtoEOSManager::l, and btagGenBb_cfi::Status.

Referenced by DecorateAndPublishGraphForDebug(), Extend(), PRun(), and PRunSetup().
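The member tables declare `closed_` as `GUARDED_BY (closed_lock_)`, and `CheckNotClosed()` is listed as referencing `closed_lock_`. The pattern this suggests can be sketched with the standard library alone. `SketchStatus` and `ClosableSketch` are hypothetical stand-ins rather than TensorFlow or CMSSW types, and the error text is an assumption, so this is an illustration of a mutex-guarded "closed" flag, not the actual NTSession implementation.

```cpp
#include <mutex>
#include <string>

// Hypothetical stand-in for ::tensorflow::Status (illustration only).
struct SketchStatus {
  bool ok;
  std::string message;
};

// Hypothetical class showing a flag that is only read or written
// while its guarding mutex is held.
class ClosableSketch {
 public:
  // Returns an error status once Close() has been called.
  SketchStatus CheckNotClosed() {
    std::lock_guard<std::mutex> l(closed_lock_);
    if (closed_) return {false, "Session has been closed."};
    return {true, ""};
  }

  // Marks the object closed; calling it again is harmless.
  SketchStatus Close() {
    std::lock_guard<std::mutex> l(closed_lock_);
    closed_ = true;
    return {true, ""};
  }

 private:
  std::mutex closed_lock_;
  bool closed_ = false;  // guarded by closed_lock_
};
```

Callers such as `Extend()`, `PRun()` and `PRunSetup()` are listed as referencing `CheckNotClosed()`, which is consistent with checking this flag before doing any work.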

| ::tensorflow::Status tensorflow::NTSession::Close () | override |

Definition at line 1325 of file NTSession.cc.

References cancellation_manager_, closed_lock_, tensorflow::NTSessionFactory::Deregister(), factory_, and cmsLHEtoEOSManager::l.

Referenced by ~NTSession().

| ::tensorflow::Status tensorflow::NTSession::Create (const GraphDef &graph) | override |

Definition at line 281 of file NTSession.cc.

References ExtendLocked(), graph_def_lock_, init_error_, and cmsLHEtoEOSManager::l.

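| ::tensorflow::Status tensorflow::NTSession::CreateDebuggerState (const DebugOptions &debug_options, int64 session_run_index, int64 executor_step_index, const std::vector< string > &input_names, const std::vector< string > &output_names, const std::vector< string > &target_names, std::unique_ptr< DebuggerStateInterface > *debugger_state) | private |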

Definition at line 321 of file NTSession.cc.

References pfDeepBoostedJetPreprocessParams_cfi::input_names.

Referenced by CheckNotClosed(), and DecorateAndPublishGraphForDebug().

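| ::tensorflow::Status tensorflow::NTSession::CreateGraphs (const BuildGraphOptions &options, std::unordered_map< string, std::unique_ptr< Graph >> *outputs, std::unique_ptr< FunctionLibraryDefinition > *flib_def, RunStateArgs *run_state_args, DataTypeVector *input_types, DataTypeVector *output_types) | private |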

Definition at line 1158 of file NTSession.cc.

References KineDebug3::count(), ztail::d, device_mgr_, device_set_, devices_, edge_name_counter_, flib_def_, tensorflow::NTSession::RunStateArgs::graph, graph_def_lock_, tensorflow::NTSession::RunStateArgs::is_partial_run, cmsLHEtoEOSManager::l, eostools::move(), Skims_PA_cff::name, options_, PatBasicFWLiteJetAnalyzer_Selector_cfg::outputs, ZMuMuAnalysisNtupler_cff::prefix, alignCSCRings::s, btagGenBb_cfi::Status, and std::swap().

Referenced by GetOrCreateExecutors().

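| ::tensorflow::Status tensorflow::NTSession::DecorateAndPublishGraphForDebug (const DebugOptions &debug_options, Graph *graph, Device *device) | private |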

Definition at line 334 of file NTSession.cc.

References writedatasetfile::args, HltBtagPostValidation_cff::c, cancellation_manager_, CheckNotClosed(), cost_model_manager_, CreateDebuggerState(), device_mgr_, devices_, executor_lock_, tensorflow::NTSession::RunState::executors_done, tensorflow::NTSession::PerPartitionExecutorsAndLib::flib, GetOrCreateExecutors(), tensorflow::NTSession::PerPartitionExecutorsAndLib::graph, graph_def_lock_, mps_fire::i, tensorflow::NTSession::ExecutorsAndKeys::input_name_to_index, tensorflow::NTSession::ExecutorsAndKeys::input_types, PixelMapPlotter::inputs, B2GTnPMonitor_cfi::item, tensorflow::NTSession::ExecutorsAndKeys::items, dqmiolumiharvest::j, cmsLHEtoEOSManager::l, eostools::move(), operation_timeout_in_ms_, options_, tensorflow::NTSession::ExecutorsAndKeys::output_types, PatBasicFWLiteJetAnalyzer_Selector_cfg::outputs, tensorflow::NTSession::RunState::rendez, ResourceHandleToInputTensor(), runTheMatrix::ret, Run(), alignCSCRings::s, SchedClosure(), session_state_, btagGenBb_cfi::Status, tensorflow::NTSession::ExecutorsAndKeys::step_count, step_id_counter_, sync_on_finish_, and WaitForNotification().

Referenced by CheckNotClosed(), and GetOrCreateExecutors().

| void tensorflow::NTSession::ExportCostModels (CostModelManager::CostModelMap *cost_models) | inline |

Definition at line 122 of file NTSession.h.

References cost_model_manager_.

| ::tensorflow::Status tensorflow::NTSession::Extend (const GraphDef &graph) | override |

Definition at line 293 of file NTSession.cc.

References CheckNotClosed(), ExtendLocked(), graph_def_lock_, and cmsLHEtoEOSManager::l.

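| ::tensorflow::Status tensorflow::NTSession::ExtendLocked (const GraphDef &graph) EXCLUSIVE_LOCKS_REQUIRED(graph_def_lock_) | private |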

Definition at line 299 of file NTSession.cc.

References flib_def_, and MaybeInitializeExecutionState().

Referenced by Create(), and Extend().

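| ::tensorflow::Status tensorflow::NTSession::GetOrCreateExecutors (gtl::ArraySlice< string > inputs, gtl::ArraySlice< string > outputs, gtl::ArraySlice< string > target_nodes, ExecutorsAndKeys **executors_and_keys, RunStateArgs *run_state_args) | private |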

Definition at line 918 of file NTSession.cc.

References CreateGraphs(), tensorflow::NTSession::RunStateArgs::debug_options, DecorateAndPublishGraphForDebug(), device_mgr_, device_set_, executor_lock_, dqmdumpme::first, tensorflow::NTSession::RunStateArgs::graph, graph_def_lock_, cuy::graphs, tensorflow::NTSession::RunStateArgs::handle, handle_name_counter_, mps_fire::i, triggerObjects_cff::id, input, tensorflow::NTSession::RunStateArgs::is_partial_run, B2GTnPMonitor_cfi::item, crabWrapper::key, cmsLHEtoEOSManager::l, mps_check::lib, eostools::move(), dqmiodumpmetadata::n, names, node_outputs_callback_, AlcaSiPixelAliHarvester0T_cff::options, options_, convertSQLitetoXML_cfg::output, CalibrationSummaryClient_cfi::params, and session_handle_.

Referenced by DecorateAndPublishGraphForDebug(), and PRunSetup().

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |

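| ::tensorflow::Status tensorflow::NTSession::ListDevices (std::vector< DeviceAttributes > *response) | override |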

| ::tensorflow::Status tensorflow::NTSession::LocalDeviceManager (const DeviceMgr **output) | inline override |

Definition at line 117 of file NTSession.h.

References device_mgr_.

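| Status tensorflow::NTSession::MaybeInitializeExecutionState (const GraphDef &graph, bool *out_already_initialized) EXCLUSIVE_LOCKS_REQUIRED(graph_def_lock_) | private |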

Definition at line 253 of file NTSession.cc.

References device_set_, flib_def_, AlcaSiPixelAliHarvester0T_cff::options, options_, and groupFilesInBlocks::temp.

Referenced by ExtendLocked().

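| ::tensorflow::Status tensorflow::NTSession::PRun (const string &handle, const NamedTensorList &inputs, const std::vector< string > &output_names, std::vector< Tensor > *outputs) | override |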

Definition at line 659 of file NTSession.cc.

References cancellation_manager_, CheckFetch(), CheckNotClosed(), fileCollector::done, executor_lock_, input, crabWrapper::key, cmsLHEtoEOSManager::l, LOG, tensorflow::NTSession::RunState::mu_, Skims_PA_cff::name, operation_timeout_in_ms_, convertSQLitetoXML_cfg::output, contentValuesFiles::parts, tensorflow::NTSession::RunState::pending_inputs, tensorflow::NTSession::RunState::pending_outputs, tensorflow::NTSession::RunState::PendingDone(), RecvPRunOutputs(), tensorflow::NTSession::RunState::rendez, alignCSCRings::s, SendPRunInputs(), session_state_, btagGenBb_cfi::Status, tensorflow::NTSession::RunState::tensor_store, WaitForNotification(), and hlt_jetmet_dqm_QT_fromfile_cfg::WARNING.

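| ::tensorflow::Status tensorflow::NTSession::PRunSetup (const std::vector< string > &input_names, const std::vector< string > &output_names, const std::vector< string > &target_nodes, string *handle) | override |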

Definition at line 592 of file NTSession.cc.

References writedatasetfile::args, HltBtagPostValidation_cff::c, cancellation_manager_, CheckNotClosed(), device_mgr_, devices_, executor_lock_, tensorflow::NTSession::RunState::executors_done, GetOrCreateExecutors(), graph_def_lock_, patZpeak::handle, tensorflow::NTSession::RunStateArgs::handle, tensorflow::NTSession::RunStateArgs::is_partial_run, B2GTnPMonitor_cfi::item, tensorflow::NTSession::ExecutorsAndKeys::items, cmsLHEtoEOSManager::l, eostools::move(), options_, tensorflow::NTSession::RunState::rendez, runTheMatrix::ret, personalPlayback::RunState, SchedClosure(), session_state_, btagGenBb_cfi::Status, step_id_counter_, and sync_on_finish_.

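| ::tensorflow::Status tensorflow::NTSession::RecvPRunOutputs (const std::vector< string > &output_names, const ExecutorsAndKeys *executors_and_keys, RunState *run_state, std::vector< Tensor > *outputs) | private |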

Definition at line 813 of file NTSession.cc.

References operation_timeout_in_ms_, tensorflow::NTSession::ExecutorsAndKeys::output_name_to_rendezvous_key, tensorflow::NTSession::RunState::rendez, alignCSCRings::s, and btagGenBb_cfi::Status.

Referenced by PRun().

| tensorflow::Status tensorflow::NTSession::Reset | ( | const std::vector< string > & | containers | ) |

Definition at line 1320 of file NTSession.cc.

References device_mgr_.

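| ::tensorflow::Status tensorflow::NTSession::ResourceHandleToInputTensor (const Tensor &resource_tensor, Tensor *retrieved_tensor) | private |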

Definition at line 750 of file NTSession.cc.

References session_state_.

Referenced by DecorateAndPublishGraphForDebug(), and SendPRunInputs().

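| ::tensorflow::Status tensorflow::NTSession::Run (const NamedTensorList &inputs, const std::vector< string > &output_names, const std::vector< string > &target_nodes, std::vector< Tensor > *outputs) | override |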

Definition at line 313 of file NTSession.cc.

Referenced by DecorateAndPublishGraphForDebug().

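| ::tensorflow::Status tensorflow::NTSession::Run (const ::tensorflow::RunOptions &run_options, const NamedTensorList &inputs, const std::vector< string > &output_names, const std::vector< string > &target_nodes, std::vector< Tensor > *outputs, RunMetadata *run_metadata) | override |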

| void tensorflow::NTSession::SchedClosure (std::function< void()> c) | private |

Definition at line 189 of file NTSession.cc.

References HltBtagPostValidation_cff::c.

Referenced by DecorateAndPublishGraphForDebug(), and PRunSetup().

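| ::tensorflow::Status tensorflow::NTSession::SendPRunInputs (const std::vector< std::pair< string, Tensor >> &inputs, const ExecutorsAndKeys *executors_and_keys, IntraProcessRendezvous *rendez) | private |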

Definition at line 775 of file NTSession.cc.

References input, tensorflow::NTSession::ExecutorsAndKeys::input_name_to_rendezvous_key, ResourceHandleToInputTensor(), alignCSCRings::s, and btagGenBb_cfi::Status.

Referenced by PRun().

| tensorflow::NTSession::TF_DISALLOW_COPY_AND_ASSIGN (NTSession) | private |

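| ::tensorflow::Status tensorflow::NTSession::WaitForNotification (Notification *n, int64 timeout_in_ms) | private |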

Definition at line 1398 of file NTSession.cc.

References btagGenBb_cfi::Status.

Referenced by DecorateAndPublishGraphForDebug(), PRun(), and WaitForNotification().

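| void tensorflow::NTSession::WaitForNotification (RunState *run_state, CancellationManager *cm, int64 timeout_in_ms) | private |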

Definition at line 1383 of file NTSession.cc.

References tensorflow::NTSession::RunState::executors_done, cmsLHEtoEOSManager::l, tensorflow::NTSession::RunState::mu_, btagGenBb_cfi::Status, mps_update::status, and WaitForNotification().

| friend class DebugGateway | friend |

Definition at line 359 of file NTSession.h.

| CancellationManager* tensorflow::NTSession::cancellation_manager_ | private |

Definition at line 321 of file NTSession.h.

Referenced by Close(), DecorateAndPublishGraphForDebug(), PRun(), PRunSetup(), and ~NTSession().

| mutex tensorflow::NTSession::closed_lock_ | private |

Definition at line 338 of file NTSession.h.

Referenced by CheckNotClosed(), and Close().

| CostModelManager tensorflow::NTSession::cost_model_manager_ | private |

Definition at line 352 of file NTSession.h.

Referenced by DecorateAndPublishGraphForDebug(), and ExportCostModels().

| const std::unique_ptr<const DeviceMgr> tensorflow::NTSession::device_mgr_ | private |

Definition at line 288 of file NTSession.h.

Referenced by CreateGraphs(), DecorateAndPublishGraphForDebug(), GetOrCreateExecutors(), LocalDeviceManager(), NTSession(), PRunSetup(), Reset(), and ~NTSession().

| DeviceSet tensorflow::NTSession::device_set_ | private |

Definition at line 290 of file NTSession.h.

Referenced by CreateGraphs(), GetOrCreateExecutors(), MaybeInitializeExecutionState(), and NTSession().

| std::vector<Device*> tensorflow::NTSession::devices_ | private |

Definition at line 289 of file NTSession.h.

Referenced by CreateGraphs(), DecorateAndPublishGraphForDebug(), ListDevices(), NTSession(), and PRunSetup().

| std::atomic<int64> tensorflow::NTSession::edge_name_counter_ = {0} | private |

Definition at line 342 of file NTSession.h.

Referenced by CreateGraphs().

| mutex tensorflow::NTSession::executor_lock_ | private |

Definition at line 306 of file NTSession.h.

Referenced by CheckFetch(), DecorateAndPublishGraphForDebug(), GetOrCreateExecutors(), PRun(), and PRunSetup().

| NTSessionFactory* const tensorflow::NTSession::factory_ | private |

Definition at line 320 of file NTSession.h.

Referenced by Close().

| std::unique_ptr<FunctionLibraryDefinition> tensorflow::NTSession::flib_def_ | private |

Definition at line 335 of file NTSession.h.

Referenced by CreateGraphs(), ExtendLocked(), MaybeInitializeExecutionState(), and ~NTSession().

| mutex tensorflow::NTSession::graph_def_lock_ | private |

Definition at line 295 of file NTSession.h.

Referenced by Create(), CreateGraphs(), DecorateAndPublishGraphForDebug(), Extend(), GetOrCreateExecutors(), and PRunSetup().

| std::atomic<int64> tensorflow::NTSession::handle_name_counter_ = {0} | private |

Definition at line 343 of file NTSession.h.

Referenced by GetOrCreateExecutors().
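The attribute table declares `handle_name_counter_` as a `std::atomic< int64 >` initialised to `{0}`, and it is referenced only by `GetOrCreateExecutors()`. A common use for such a counter is producing unique names without taking a lock. The sketch below shows that idea with the standard library; `HandleNamerSketch`, its `Next()` method and the `prefix;id` format are hypothetical and are not taken from NTSession.

```cpp
#include <atomic>
#include <cstdint>
#include <string>

// Hypothetical helper (not part of NTSession): appends a unique,
// monotonically increasing suffix to a caller-supplied prefix.
class HandleNamerSketch {
 public:
  std::string Next(const std::string& prefix) {
    // fetch_add returns the value held before the increment, so the
    // first suffix is 0 and concurrent callers never share an id.
    const std::int64_t id = counter_.fetch_add(1);
    return prefix + ";" + std::to_string(id);
  }

 private:
  std::atomic<std::int64_t> counter_{0};
};
```

Because the increment is atomic, two threads calling `Next()` at the same time still receive distinct suffixes, which is why no mutex is needed around the counter itself.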

| Status tensorflow::NTSession::init_error_ | private |

Definition at line 298 of file NTSession.h.

Referenced by Create().

| Executor::Args::NodeOutputsCallback tensorflow::NTSession::node_outputs_callback_ = nullptr | private |

Definition at line 354 of file NTSession.h.

Referenced by GetOrCreateExecutors().

| const int64 tensorflow::NTSession::operation_timeout_in_ms_ = 0 | private |

Definition at line 349 of file NTSession.h.

Referenced by DecorateAndPublishGraphForDebug(), PRun(), and RecvPRunOutputs().

| const SessionOptions tensorflow::NTSession::options_ | private |

Definition at line 285 of file NTSession.h.

Referenced by CreateGraphs(), DecorateAndPublishGraphForDebug(), GetOrCreateExecutors(), MaybeInitializeExecutionState(), valtools.webpage::parseArgs(), batchmanager.BatchManager::ParseOptions(), and PRunSetup().

| string tensorflow::NTSession::session_handle_ | private |

Definition at line 292 of file NTSession.h.

Referenced by GetOrCreateExecutors(), NTSession(), and ~NTSession().

| SessionState tensorflow::NTSession::session_state_ | private |

Definition at line 318 of file NTSession.h.

Referenced by DecorateAndPublishGraphForDebug(), PRun(), PRunSetup(), and ResourceHandleToInputTensor().

| std::atomic_int_fast64_t tensorflow::NTSession::step_id_counter_ | static private |

Definition at line 346 of file NTSession.h.

Referenced by DecorateAndPublishGraphForDebug(), and PRunSetup().

| bool tensorflow::NTSession::sync_on_finish_ = true | private |

Definition at line 301 of file NTSession.h.

Referenced by DecorateAndPublishGraphForDebug(), NTSession(), and PRunSetup().
