Static Public Member Functions | |

| def | getrunnumberfromfilename (filename) |

Public Attributes | |

| bad_files | |

| castorDir | |

| files | |

| filesAndSizes | |

| good_files | |

| lfnDir | |

| maskExists | |

| report | |

Public Attributes inherited from dataset.BaseDataset | |

| bad_files | |

| dbsInstance | |

| MM. | |

| files | |

| filesAndSizes | |

| good_files | |

| name | |

| pattern | |

| primaryDatasetEntries | |

| MM. | |

| report | |

| run_range | |

| user | |

Private Member Functions | |

| def | __chunks (self, theList, n) |

| def | __createSnippet (self, jsonPath=None, begin=None, end=None, firstRun=None, lastRun=None, repMap=None, crab=False, parent=False) |

| def | __dateString (self, date) |

| def | __datetime (self, stringForDas) |

| def | __fileListSnippet (self, crab=False, parent=False, firstRun=None, lastRun=None, forcerunselection=False) |

| def | __find_ge (self, a, x) |

| def | __find_lt (self, a, x) |

| def | __findInJson (self, jsondict, strings) |

| def | __getData (self, dasQuery, dasLimit=0) |

| def | __getDataType (self) |

| def | __getFileInfoList (self, dasLimit, parent=False) |

| def | __getMagneticField (self) |

| def | __getMagneticFieldForRun (self, run=-1, tolerance=0.5) |

| def | __getParentDataset (self) |

| def | __getRunList (self) |

| def | __lumiSelectionSnippet (self, jsonPath=None, firstRun=None, lastRun=None) |

Static Private Attributes | |

| tuple | __dummy_source_template |

| __source_template | |

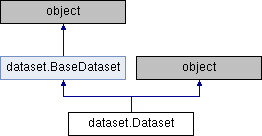

Definition at line 18 of file dataset.py.

| def dataset.Dataset.__init__ | ( | self, datasetName, dasLimit = 0, tryPredefinedFirst = True, cmssw = os.environ["CMSSW_BASE"], cmsswrelease = os.environ["CMSSW_RELEASE_BASE"], magneticfield = None, dasinstance = None | ) |

Definition at line 21 of file dataset.py.

Referenced by dataset.Dataset.__init__().

| def dataset.Dataset.__init__ | ( | self, name, user, pattern = '.*root' | ) |

Definition at line 266 of file dataset.py.

References dataset.Dataset.__init__().

| def dataset.Dataset.__chunks | ( | self, theList, n | ) |

private |

Yield successive n-sized chunks from theList.

Definition at line 87 of file dataset.py.

Referenced by dataset.Dataset.__fileListSnippet(), dataset.Dataset.__lumiSelectionSnippet(), and dataset.Dataset.createdatasetfile_hippy().
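The description matches the common Python idiom of slicing a list in steps of n. A minimal sketch, assuming __chunks behaves like the standalone generator below (the name chunks and the example file names are hypothetical):

```python
def chunks(the_list, n):
    """Hypothetical stand-in for __chunks: yield successive n-sized slices of the_list.

    The last chunk is shorter when len(the_list) is not a multiple of n.
    """
    for i in range(0, len(the_list), n):
        yield the_list[i:i + n]


files = ["a.root", "b.root", "c.root", "d.root", "e.root"]
print(list(chunks(files, 2)))  # [['a.root', 'b.root'], ['c.root', 'd.root'], ['e.root']]
```

Because it is a generator, callers such as a per-job file writer can consume one chunk at a time without building the full list of chunks.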

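| def dataset.Dataset.__createSnippet | ( | self, jsonPath = None, begin = None, end = None, firstRun = None, lastRun = None, repMap = None, crab = False, parent = False | ) |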

private |

Definition at line 243 of file dataset.py.

References dataset.Dataset.__dummy_source_template, dataset.Dataset.__fileListSnippet(), dataset.Dataset.__lumiSelectionSnippet(), dataset.Dataset.__source_template, electrons_cff.bool, dataset.Dataset.convertTimeToRun(), and dataset.int.

Referenced by dataset.Dataset.__fileListSnippet(), dataset.Dataset.datasetSnippet(), and dataset.Dataset.dump_cff().

| def dataset.Dataset.__dateString | ( | self, date | ) |

private |

Definition at line 638 of file dataset.py.

References dataset.Dataset.convertTimeToRun(), and str.

Referenced by dataset.Dataset.convertTimeToRun().

| def dataset.Dataset.__datetime | ( | self, stringForDas | ) |

private |

Definition at line 629 of file dataset.py.

References dataset.int.

Referenced by dataset.Dataset.convertTimeToRun().

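| def dataset.Dataset.__fileListSnippet | ( | self, crab = False, parent = False, firstRun = None, lastRun = None, forcerunselection = False | ) |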

private |

Definition at line 219 of file dataset.py.

References dataset.Dataset.__chunks(), dataset.Dataset.__createSnippet(), dataset.Dataset.__name, dataset.Dataset.fileList(), join(), and list().

Referenced by dataset.Dataset.__createSnippet().

| def dataset.Dataset.__find_ge | ( | self, a, x | ) |

private |

| def dataset.Dataset.__find_lt | ( | self, a, x | ) |

private |
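The names and (a, x) signatures of __find_ge and __find_lt, both referenced by convertTimeToRun, match the bisect recipes in the "Searching Sorted Lists" section of the Python documentation. Assuming they follow those recipes, they look roughly like the module-level functions below (written without self; the run numbers are made up):

```python
from bisect import bisect_left


def find_ge(a, x):
    """Return the leftmost item in the sorted list a that is >= x."""
    i = bisect_left(a, x)
    if i != len(a):
        return a[i]
    raise ValueError("no item >= %r" % (x,))


def find_lt(a, x):
    """Return the rightmost item in the sorted list a that is < x."""
    i = bisect_left(a, x)
    if i:
        return a[i - 1]
    raise ValueError("no item < %r" % (x,))


runs = [315252, 315255, 315257, 315259]
print(find_ge(runs, 315253))  # 315255
print(find_lt(runs, 315257))  # 315255
```

Both lookups are O(log n) on a sorted list, which is why they suit mapping a boundary value onto the nearest entry of a sorted run list.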

| def dataset.Dataset.__findInJson | ( | self, jsondict, strings | ) |

private |

Definition at line 302 of file dataset.py.

References dataset.Dataset.__findInJson().

Referenced by dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__getParentDataset(), dataset.Dataset.__getRunList(), dataset.Dataset.__lumiSelectionSnippet(), dataset.Dataset.convertTimeToRun(), and dataset.Dataset.fileList().
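Since __findInJson(jsondict, strings) references itself, it is presumably a recursive lookup along a sequence of keys in a JSON reply. The sketch below is hypothetical: the name find_in_json, accepting a single string as a one-key path, and searching lists of dicts element by element are assumptions, not taken from dataset.py, and the example reply is made up.

```python
def find_in_json(jsondict, strings):
    """Hypothetical recursive lookup: follow the keys in `strings` through nested dicts/lists."""
    if isinstance(strings, str):
        strings = [strings]  # allow a single key instead of a list of keys
    if not strings:
        return jsondict  # key path exhausted: this is the requested value
    key, rest = strings[0], strings[1:]
    if isinstance(jsondict, dict):
        if key in jsondict:
            return find_in_json(jsondict[key], rest)
    elif isinstance(jsondict, list):
        # For lists, try each dict element that has the key until one succeeds
        for item in jsondict:
            if isinstance(item, dict) and key in item:
                try:
                    return find_in_json(item[key], rest)
                except KeyError:
                    pass
    raise KeyError("Can't find " + key)


reply = {"data": [{"dataset": [{"datatype": "data"}]}]}
print(find_in_json(reply, ["data", "dataset", "datatype"]))  # data
```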

| def dataset.Dataset.__getData | ( | self, dasQuery, dasLimit = 0 | ) |

private |

Definition at line 354 of file dataset.py.

References dataset.Dataset.__findInJson(), and str.

Referenced by dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__getParentDataset(), dataset.Dataset.__getRunList(), and dataset.Dataset.convertTimeToRun().

| def dataset.Dataset.__getDataType | ( | self | ) |

private |

Definition at line 386 of file dataset.py.

References dataset.Dataset.__dasinstance, dataset.Dataset.__filename, dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, dataset.Dataset.__predefined, dataset.BaseDataset.name, the name members of many unrelated CMSSW classes (for example ParameterSet.name, config.Analyzer.name and cond::persistency::TAG::NAME.name, linked by Doxygen only because they share the attribute name), and python.rootplot.root2matplotlib.replace().

Referenced by dataset.Dataset.dataType().

| def dataset.Dataset.__getFileInfoList | ( | self, dasLimit, parent = False | ) |

private |

Definition at line 559 of file dataset.py.

References dataset.Dataset.__dasinstance, dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, dataset.Dataset.__predefined, dataset.BaseDataset.name, the name members of many unrelated CMSSW classes (for example ParameterSet.name, config.Analyzer.name and cond::persistency::TAG::NAME.name, linked by Doxygen only because they share the attribute name), dataset.Dataset.parentDataset(), and edm.print().

Referenced by dataset.Dataset.fileInfoList().

| def dataset.Dataset.__getMagneticField | ( | self | ) |

private |

Definition at line 421 of file dataset.py.

References dataset.Dataset.__cmssw, dataset.Dataset.__cmsswrelease, dataset.Dataset.__dasinstance, dataset.Dataset.__dataType, dataset.Dataset.__filename, dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__inputMagneticField, dataset.Dataset.__name, dataset.Dataset.__predefined, edm.print(), python.rootplot.root2matplotlib.replace(), and digitizers_cfi.strip.

Referenced by dataset.Dataset.magneticField().

| def dataset.Dataset.__getMagneticFieldForRun | ( | self, run = -1, tolerance = 0.5 | ) |

private |

For MC, this returns the same value as __getMagneticField(). For data, it determines the magnetic field from the runs. This matters when deciding which template to use for the offline validation.

Definition at line 502 of file dataset.py.

References dataset.Dataset.__dasinstance, dataset.Dataset.__dataType, dataset.Dataset.__filename, dataset.Dataset.__findInJson(), dataset.Dataset.__firstusedrun, dataset.Dataset.__getData(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__inputMagneticField, dataset.Dataset.__lastusedrun, dataset.Dataset.__magneticField, dataset.Dataset.__name, dataset.Dataset.__predefined, funct.abs(), objects.autophobj.float, edm.print(), python.rootplot.root2matplotlib.replace(), split, and digitizers_cfi.strip.

Referenced by dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.dump_cff(), and dataset.Dataset.magneticFieldForRun().

| def dataset.Dataset.__getParentDataset | ( | self | ) |

private |

Definition at line 411 of file dataset.py.

References dataset.Dataset.__dasinstance, dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, and str.

Referenced by dataset.Dataset.parentDataset().

| def dataset.Dataset.__getRunList | ( | self | ) |

private |

Definition at line 618 of file dataset.py.

References dataset.Dataset.__dasinstance, dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__name, and edm.print().

Referenced by dataset.Dataset.__lumiSelectionSnippet(), dataset.Dataset.convertTimeToRun(), and dataset.Dataset.runList().

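| def dataset.Dataset.__lumiSelectionSnippet | ( | self, jsonPath = None, firstRun = None, lastRun = None | ) |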

private |

Definition at line 123 of file dataset.py.

References dataset.Dataset.__chunks(), dataset.Dataset.__findInJson(), dataset.Dataset.__firstusedrun, dataset.Dataset.__getRunList(), dataset.Dataset.__inputMagneticField, dataset.Dataset.__lastusedrun, dataset.Dataset.getForceRunRangeFunction(), dataset.int, join(), list(), SiStripPI.max, min(), edm.print(), python.rootplot.root2matplotlib.replace(), split, and str.

Referenced by dataset.Dataset.__createSnippet().

| def dataset.Dataset.buildListOfBadFiles | ( | self | ) |

Fills the list of bad files from the IntegrityCheck log. When the integrity-check file is not available, all files are considered good.

Definition at line 277 of file dataset.py.
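The fallback rule above (every file counts as good when no integrity-check information exists) can be sketched as a partition of the file list. This is hypothetical: the helper split_good_bad, passing the bad-file names as an optional set, and using None to mean "IntegrityCheck log missing" are assumptions; the real method reads the log itself.

```python
def split_good_bad(files, bad_names=None):
    """Hypothetical helper: partition files into (good, bad).

    bad_names is None when the IntegrityCheck log is unavailable,
    in which case every file is treated as good.
    """
    if bad_names is None:
        return list(files), []
    good = [f for f in files if f not in bad_names]
    bad = [f for f in files if f in bad_names]
    return good, bad


files = ["tree_1.root", "tree_2.root", "tree_3.root"]
print(split_good_bad(files))                   # (['tree_1.root', 'tree_2.root', 'tree_3.root'], [])
print(split_good_bad(files, {"tree_2.root"}))  # (['tree_1.root', 'tree_3.root'], ['tree_2.root'])
```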

| def dataset.Dataset.buildListOfFiles | ( | self, pattern = '.*root' | ) |

Fills the list of files with all ROOT files in the CASTOR directory that match pattern.

Definition at line 273 of file dataset.py.
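Selecting the file names that match pattern can be sketched with Python's re module. A minimal sketch, assuming the pattern is applied with re.match to each name (the helper matching_files and the example names are hypothetical; listing the CASTOR directory is not shown):

```python
import re


def matching_files(names, pattern='.*root'):
    """Hypothetical helper: keep the names that match `pattern` from their start."""
    regex = re.compile(pattern)
    return [name for name in names if regex.match(name)]


names = ["tree_1.root", "log.txt", "tree_2.root"]
print(matching_files(names))  # ['tree_1.root', 'tree_2.root']
```

Note that re.match anchors only at the start of the string, so with the default '.*root' a name such as "tree_3.root.bak" also matches; appending $ to the pattern (or using re.fullmatch) gives stricter filtering.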

| def dataset.Dataset.convertTimeToRun | ( | self, begin = None, end = None, firstRun = None, lastRun = None, shortTuple = True | ) |

Definition at line 643 of file dataset.py.

References dataset.Dataset.__dasinstance, dataset.Dataset.__dateString(), dataset.Dataset.__datetime(), dataset.Dataset.__find_ge(), dataset.Dataset.__find_lt(), dataset.Dataset.__findInJson(), dataset.Dataset.__getData(), dataset.Dataset.__getRunList(), dataset.Dataset.__name, electrons_cff.bool, and dataset.int.

Referenced by dataset.Dataset.__createSnippet(), and dataset.Dataset.__dateString().

| def dataset.Dataset.createdatasetfile_hippy | ( | self, filename, filesperjob, firstrun, lastrun | ) |

Definition at line 850 of file dataset.py.

References dataset.Dataset.__chunks(), dataset.Dataset.fileList(), and join().

| def dataset.Dataset.datasetSnippet | ( | self, jsonPath = None, begin = None, end = None, firstRun = None, lastRun = None, crab = False, parent = False | ) |

Definition at line 727 of file dataset.py.

References dataset.Dataset.__createSnippet(), dataset.Dataset.__filename, dataset.Dataset.__name, dataset.Dataset.__official, dataset.Dataset.__origName, dataset.Dataset.__predefined, dataset.Dataset.dump_cff(), and edm.print().

Referenced by dataset.Dataset.parentDataset().

| def dataset.Dataset.dataType | ( | self | ) |

Definition at line 708 of file dataset.py.

References dataset.Dataset.__dataType, and dataset.Dataset.__getDataType().

| def dataset.Dataset.dump_cff | ( | self, outName = None, jsonPath = None, begin = None, end = None, firstRun = None, lastRun = None, parent = False | ) |

Definition at line 789 of file dataset.py.

References dataset.Dataset.__cmssw, dataset.Dataset.__createSnippet(), dataset.Dataset.__dataType, dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__magneticField, dataset.Dataset.__name, edm.print(), python.rootplot.root2matplotlib.replace(), split, str, and digitizers_cfi.strip.

Referenced by dataset.Dataset.datasetSnippet().

| def dataset.Dataset.extractFileSizes | ( | self | ) |

Gets the size of each file from the output of the eos ls -l command.

Definition at line 308 of file dataset.py.

References dataset.EOSDataset.castorDir, and dataset.Dataset.castorDir.
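Reading sizes from eos ls -l output amounts to parsing ls-style long listings. A sketch, assuming each line has the usual ls -l column layout (permissions, link count, owner, group, size, three date/time fields, name) and that file names contain no spaces; running the eos command is not shown, and parse_ls_sizes and the example listing are hypothetical.

```python
def parse_ls_sizes(listing):
    """Hypothetical parser: map file name -> size in bytes from `ls -l`-style lines."""
    sizes = {}
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) < 9:
            continue  # skip blank lines and summary lines such as "total 123"
        sizes[fields[8]] = int(fields[4])  # assumed: size is the 5th column, name the 9th
    return sizes


example = """-rw-r--r--   1 user  zh  1048576 Jan 12 10:03 tree_1.root
-rw-r--r--   1 user  zh  2097152 Jan 12 10:05 tree_2.root"""
print(parse_ls_sizes(example))  # {'tree_1.root': 1048576, 'tree_2.root': 2097152}
```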

| def dataset.Dataset.fileInfoList |

Definition at line 912 of file dataset.py.

References dataset.Dataset.__dasLimit, and dataset.Dataset.__getFileInfoList().

Referenced by dataset.Dataset.fileList().

| def dataset.Dataset.fileList | ( | self, parent = False, firstRun = None, lastRun = None, forcerunselection = False | ) |

Definition at line 883 of file dataset.py.

References dataset.Dataset.__findInJson(), dataset.Dataset.fileInfoList(), objects.autophobj.float, dataset.Dataset.getrunnumberfromfilename(), and edm.print().

Referenced by dataset.Dataset.__fileListSnippet(), and dataset.Dataset.createdatasetfile_hippy().

| def dataset.Dataset.forcerunrange | ( | self, firstRun, lastRun, s | ) |

s must have the format run1:lum1-run2:lum2.

Definition at line 324 of file dataset.py.

References dataset.Dataset.__firstusedrun, dataset.Dataset.__lastusedrun, dataset.int, and split.

Referenced by dataset.Dataset.getForceRunRangeFunction().
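A string in the run1:lum1-run2:lum2 format can be split into two (run, luminosity section) pairs with plain str.split. The sketch below is hypothetical: parse_lumi_range and overlaps_runs are illustrative names, and the overlap test against a [firstRun, lastRun] window is an assumption about why forcerunrange receives firstRun and lastRun, not a description of what it returns.

```python
def parse_lumi_range(s):
    """Split 'run1:lum1-run2:lum2' into ((run1, lum1), (run2, lum2)) as integers."""
    start, end = s.split("-")
    run1, lum1 = (int(x) for x in start.split(":"))
    run2, lum2 = (int(x) for x in end.split(":"))
    return (run1, lum1), (run2, lum2)


def overlaps_runs(s, first_run, last_run):
    """Hypothetical check: does the range touch any run in [first_run, last_run]?"""
    (run1, _), (run2, _) = parse_lumi_range(s)
    return run1 <= last_run and run2 >= first_run


print(parse_lumi_range("315252:1-315255:120"))               # ((315252, 1), (315255, 120))
print(overlaps_runs("315252:1-315255:120", 315254, 316000))  # True
```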

| def dataset.Dataset.getForceRunRangeFunction | ( | self, firstRun, lastRun | ) |

Definition at line 349 of file dataset.py.

References dataset.Dataset.forcerunrange().

Referenced by dataset.Dataset.__lumiSelectionSnippet().

| def dataset.Dataset.getPrimaryDatasetEntries | ( | self | ) |

Definition at line 328 of file dataset.py.

References dataset.int, runall.testit.report, WorkFlowRunner.WorkFlowRunner.report, dataset.BaseDataset.report, and ALIUtils.report.

| def dataset.Dataset.getrunnumberfromfilename | ( | filename | ) |

static |

Definition at line 856 of file dataset.py.

References Vispa.Plugins.EdmBrowser.EdmDataAccessor.all(), dataset.int, and join().

Referenced by dataset.Dataset.fileList().
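In CMS logical file names for data, the run number is spread over three three-digit directories (for example .../000/315/252/... for run 315252). Assuming that layout, with those directories sitting just before the block directory and the file name, a hypothetical sketch of getrunnumberfromfilename could look like this; run_number_from_lfn is an illustrative name, and the checks in dataset.py may differ.

```python
def run_number_from_lfn(filename):
    """Hypothetical: read the run number from a CMS-style LFN such as
    /store/data/Run2018A/ZeroBias/ALCARECO/v1/000/315/252/00000/file.root
    """
    parts = filename.split("/")
    run_parts = parts[-5:-2]  # assumed: <run/1e6>/<run/1e3 % 1e3>/<run % 1e3>/<block>/<file>
    if len(run_parts) != 3 or not all(len(p) == 3 and p.isdigit() for p in run_parts):
        raise ValueError("cannot find a run number in " + filename)
    return int("".join(run_parts))


lfn = "/store/data/Run2018A/ZeroBias/ALCARECO/v1/000/315/252/00000/file.root"
print(run_number_from_lfn(lfn))  # 315252
```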

| def dataset.Dataset.magneticField | ( | self | ) |

Definition at line 713 of file dataset.py.

References dataset.Dataset.__getMagneticField(), and dataset.Dataset.__magneticField.

| def dataset.Dataset.magneticFieldForRun | ( | self, run = -1 | ) |

Definition at line 718 of file dataset.py.

References dataset.Dataset.__getMagneticFieldForRun().

| def dataset.Dataset.name | ( | self | ) |

Definition at line 915 of file dataset.py.

References dataset.Dataset.__name.

Referenced by config.CFG.__str__(), validation.Sample.digest(), VIDSelectorBase.VIDSelectorBase.initialize(), and Vispa.Views.PropertyView.Property.valueChanged().

| def dataset.Dataset.parentDataset | ( | self | ) |

Definition at line 721 of file dataset.py.

References dataset.Dataset.__getParentDataset(), dataset.Dataset.__parentDataset, and dataset.Dataset.datasetSnippet().

Referenced by dataset.Dataset.__getFileInfoList().

| def dataset.Dataset.predefined | ( | self | ) |

| def dataset.Dataset.printInfo | ( | self | ) |

Definition at line 323 of file dataset.py.

References dataset.EOSDataset.castorDir, dataset.Dataset.castorDir, dataset.Dataset.lfnDir, dataset.BaseDataset.name, the name members of many unrelated CMSSW classes (for example ParameterSet.name, config.Analyzer.name and cond::persistency::TAG::NAME.name, linked by Doxygen only because they share the attribute name), and edm.print().

| def dataset.Dataset.runList | ( | self | ) |

Definition at line 922 of file dataset.py.

References dataset.Dataset.__getRunList(), and edm.print().

| dataset.Dataset.__cmssw |

private |

Definition at line 26 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticField(), and dataset.Dataset.dump_cff().

| dataset.Dataset.__cmsswrelease |

private |

Definition at line 27 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticField().

| dataset.Dataset.__dasinstance |

private |

Definition at line 25 of file dataset.py.

Referenced by dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__getParentDataset(), dataset.Dataset.__getRunList(), and dataset.Dataset.convertTimeToRun().

| dataset.Dataset.__dasLimit |

private |

Definition at line 24 of file dataset.py.

Referenced by dataset.Dataset.fileInfoList().

| dataset.Dataset.__dataType |

private |

Definition at line 83 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.dataType(), and dataset.Dataset.dump_cff().

| tuple dataset.Dataset.__dummy_source_template |

staticprivate |

Definition at line 111 of file dataset.py.

Referenced by dataset.Dataset.__createSnippet().

| dataset.Dataset.__filename |

private |

Definition at line 53 of file dataset.py.

Referenced by dataset.Dataset.__getDataType(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.datasetSnippet(), csvReporter.csvReporter.writeRow(), and csvReporter.csvReporter.writeRows().

| dataset.Dataset.__firstusedrun |

private |

Definition at line 28 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__lumiSelectionSnippet(), and dataset.Dataset.forcerunrange().

| dataset.Dataset.__inputMagneticField |

private |

Definition at line 81 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), and dataset.Dataset.__lumiSelectionSnippet().

| dataset.Dataset.__lastusedrun |

private |

Definition at line 29 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__lumiSelectionSnippet(), and dataset.Dataset.forcerunrange().

| dataset.Dataset.__magneticField |

private |

Definition at line 84 of file dataset.py.

Referenced by dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.dump_cff(), and dataset.Dataset.magneticField().

| dataset.Dataset.__name |

private |

Definition at line 22 of file dataset.py.

Referenced by dataset.Dataset.__fileListSnippet(), dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.__getParentDataset(), dataset.Dataset.__getRunList(), dataset.Dataset.convertTimeToRun(), dataset.Dataset.datasetSnippet(), dataset.Dataset.dump_cff(), Config.Process.dumpConfig(), Config.Process.dumpPython(), genericValidation.ValidationWithPlotsSummaryBase.SummaryItem.name(), dataset.Dataset.name(), and Config.Process.name_().

| dataset.Dataset.__official |

private |

Definition at line 34 of file dataset.py.

Referenced by dataset.Dataset.datasetSnippet().

| dataset.Dataset.__origName |

private |

Definition at line 23 of file dataset.py.

Referenced by dataset.Dataset.datasetSnippet().

| dataset.Dataset.__parentDataset |

private |

Definition at line 30 of file dataset.py.

Referenced by dataset.Dataset.parentDataset().

| dataset.Dataset.__predefined |

private |

Definition at line 50 of file dataset.py.

Referenced by dataset.Dataset.__getDataType(), dataset.Dataset.__getFileInfoList(), dataset.Dataset.__getMagneticField(), dataset.Dataset.__getMagneticFieldForRun(), dataset.Dataset.datasetSnippet(), and dataset.Dataset.predefined().

| dataset.Dataset.__source_template |

staticprivate |

Definition at line 93 of file dataset.py.

Referenced by dataset.Dataset.__createSnippet().

| dataset.Dataset.bad_files |

Definition at line 284 of file dataset.py.

| dataset.Dataset.castorDir |

Definition at line 268 of file dataset.py.

Referenced by dataset.Dataset.extractFileSizes(), and dataset.Dataset.printInfo().

| dataset.Dataset.files |

Definition at line 275 of file dataset.py.

| dataset.Dataset.filesAndSizes |

Definition at line 313 of file dataset.py.

| dataset.Dataset.good_files |

Definition at line 285 of file dataset.py.

| dataset.Dataset.lfnDir |

Definition at line 267 of file dataset.py.

Referenced by dataset.Dataset.printInfo().

| dataset.Dataset.maskExists |

Definition at line 269 of file dataset.py.

| dataset.Dataset.report |

Definition at line 270 of file dataset.py.

Referenced by addOnTests.testit.run().

1.8.11
